11.2. How to Conduct Streaming Replication

Streaming replication has two aspects: log shipping and database synchronization. Log shipping is the main aspect of streaming replication, as the primary server sends WAL data to the connected standby servers whenever they are written. Database synchronization is required for synchronous replication, where the primary server communicates with each standby server to synchronize their database clusters.

To accurately understand how streaming replication works, we need to understand how one primary server manages multiple standby servers. We will start with the simple case (i.e., single-primary single-standby system) in this section, and then discuss the general case (single-primary multi-standby system) in the next section.

11.2.1. Communication Between a Primary and a Synchronous Standby

Assume that the standby server is in the synchronous replication mode, but the configuration parameter hot_standby is disabled and wal_level is ‘replica’. The main parameter of the primary server is shown below:

synchronous_standby_names = 'standby1'

hot_standby = off

wal_level = replicaAdditionally, among the three triggers to write the WAL data mentioned in Section 9.5, we focus on the transaction commits here.

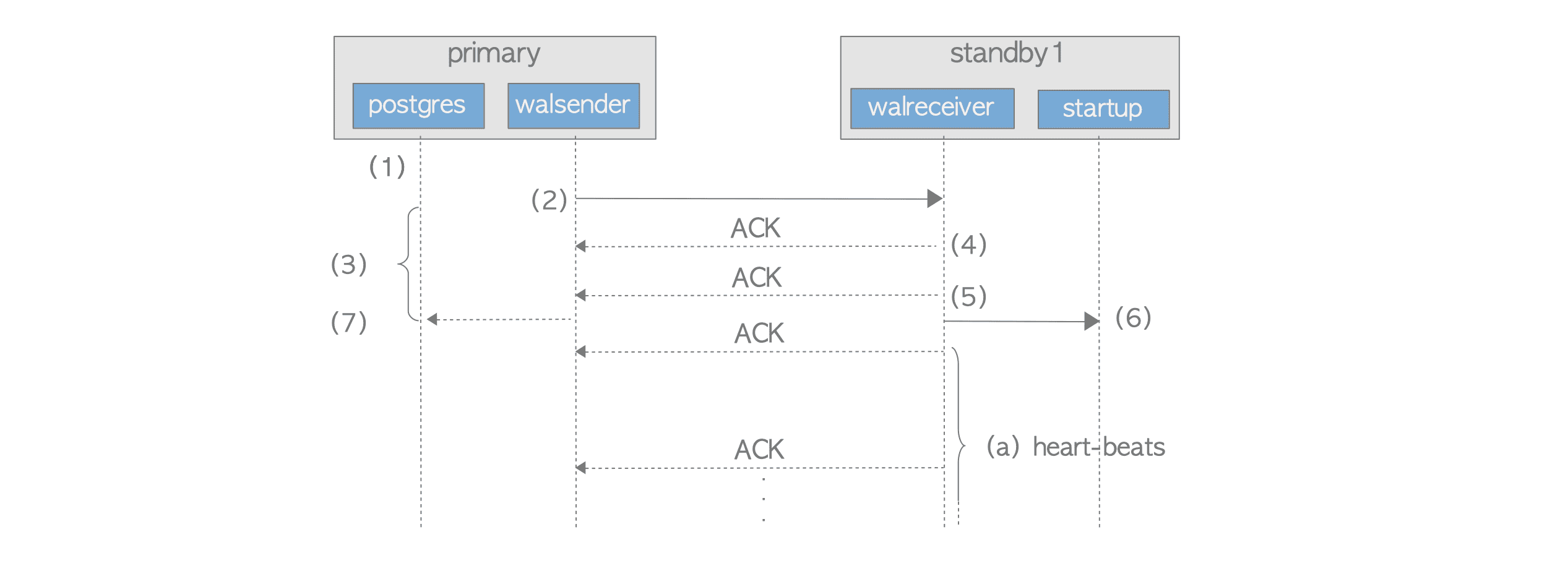

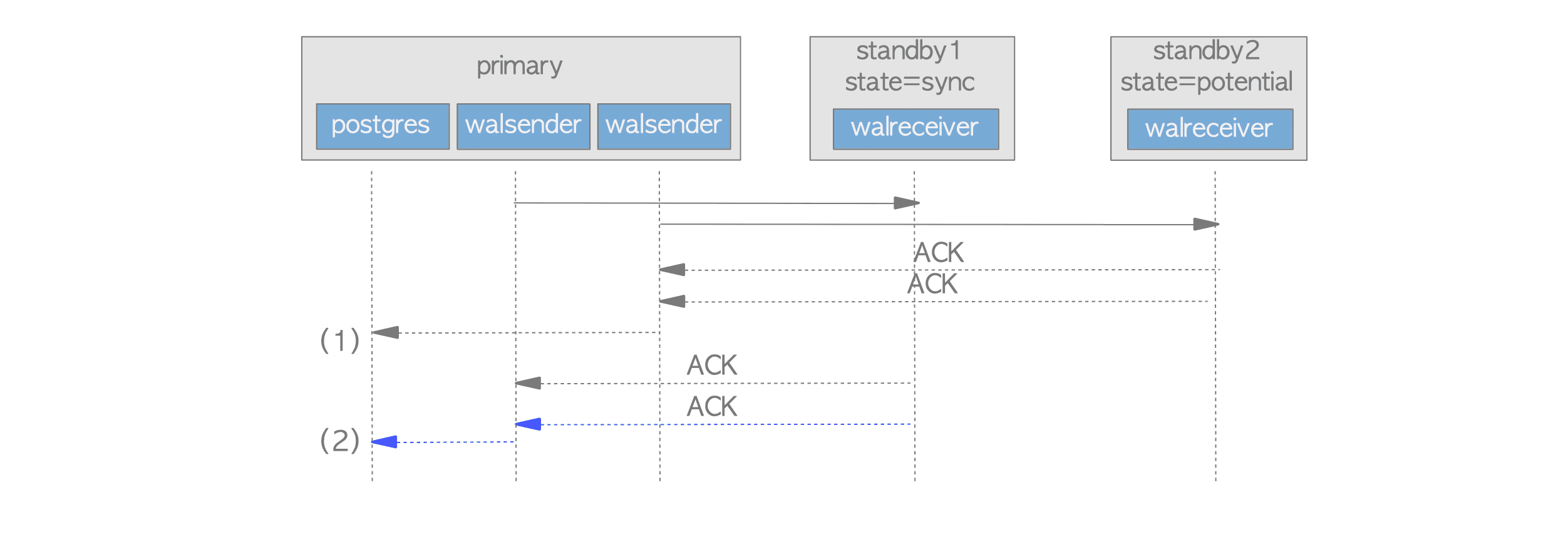

Suppose that one backend process on the primary server issues a simple INSERT statement in autocommit mode. The backend starts a transaction, issues an INSERT statement, and then commits the transaction immediately. Let’s explore further how this commit action will be completed. See the following sequence diagram in Figure 11.2:

Figure 11.2. Streaming Replication's communication sequence diagram.

-

The backend process writes and flushes WAL data to a WAL segment file by executing the functions XLogInsert() and XLogFlush().

-

The walsender process sends the WAL data written into the WAL segment to the walreceiver process.

-

After sending the WAL data, the backend process continues to wait for an ACK response from the standby server. More precisely, the backend process gets a latch by executing the internal function SyncRepWaitForLSN(), and waits for it to be released.

-

The walreceiver on the standby server writes the received WAL data into the standby’s WAL segment using the write() system call, and returns an ACK response to the walsender.

-

The walreceiver flushes the WAL data to the WAL segment using the system call such as fsync(), returns another ACK response to the walsender, and informs the startup process about WAL data updated.

-

The startup process replays the WAL data, which has been written to the WAL segment.

-

The walsender releases the latch of the backend process on receiving the ACK response from the walreceiver, and then, the backend process’s commit or abort action will be completed. The timing for latch-release depends on the parameter synchronous_commit.

It is ‘on’ (default), the latch is released when the ACK of step (5) received, whereas it is ‘remote_write’, the latch is released when the ACK of step (4) is received.

Each ACK response informs the primary server of the internal information of standby server. It contains four items below:

- The LSN location where the latest WAL data has been written.

- The LSN location where the latest WAL data has been flushed.

- The LSN location where the latest WAL data has been replayed in the startup process.

- The timestamp when this response has be sent.

The walreceiver returns ACK responses not only when WAL data have been written and flushed, but also periodically as a heartbeat from the standby server. The primary server therefore always has an accurate understanding of the status of all connected standby servers.

The LSN-related information of the connected standby servers can be displayed by issuing the queries shown below:

testdb=# SELECT application_name AS host,

write_location AS write_LSN, flush_location AS flush_LSN,

replay_location AS replay_LSN FROM pg_stat_replication;

host | write_lsn | flush_lsn | replay_lsn

----------+-----------+-----------+------------

standby1 | 0/5000280 | 0/5000280 | 0/5000280

standby2 | 0/5000280 | 0/5000280 | 0/5000280

(2 rows)The heartbeat interval is set to the parameter wal_receiver_status_interval, which is 10 seconds by default.

11.2.2. Detecting Failures of Standby Servers

Streaming replication uses two common failure detection procedures that do not require any special hardware.

-

Failure detection of standby server process:

- When a connection drop between the walsender and walreceiver is detected, the primary server immediately determines that the standby server or walreceiver process is faulty.

- When a low-level network function returns an error by failing to write or read the socket interface of the walreceiver, the primary server also immediately determines its failure.

-

Failure detection of hardware and networks:

- If a walreceiver does not return anything within the time set for the parameter wal_sender_timeout (default 60 seconds), the primary server determines that the standby server is faulty.

- In contrast to the failure described above, it takes a certain amount of time, up to wal_sender_timeout seconds, to confirm the standby’s death on the primary server even if a standby server is no longer able to send any response due to some failures (e.g., standby server’s hardware failure, network failure, etc.).

Depending on the type of failure, it can usually be detected immediately after the failure occurs. However, there may be a time lag between the occurrence of the failure and its detection. In particular, if the latter type of failure occurs in a synchronous standby server, all transaction processing on the primary server will be stopped until the failure of the standby is detected, even if multiple potential standby servers may have been working.

11.2.3. Behavior When a Failure Occurs

This subsection describes how the primary server behaves when a synchronous standby server fails, and how to deal with the situation.

Even if a synchronous standby server fails and is no longer able to return an ACK response, the primary server will continue to wait for responses forever. This means that running transactions cannot commit and subsequent query processing cannot be started. In other words, all primary server operations are effectively stopped. (Streaming replication does not support a function to automatically revert to asynchronous mode after a timeout.)

There are two ways to avoid such situation. One is to use multiple standby servers to increase system availability. The other is to manually switch from synchronous to asynchronous mode by performing the following steps:

-

Set the parameter synchronous_standby_names to an empty string.

synchronous_standby_names = '' -

Execute the pg_ctl command with reload option.

$ pg_ctl -D $PGDATA reload

This procedure does not affect connected clients. The primary server will continue to process transactions and all sessions between clients and their respective backend processes will be maintained.

11.2.4. Conflicts

In streaming replication, standbys can execute SELECT commands independently of the primary server. However, conflicts can arise between the standby and the primary server under certain conditions, potentially leading to errors.

Figure 11.3 shows a typical example: the primary server drops the table that the standby server is selecting.

Figure 11.3. Conflict caused by DROP TABLE.

- The standby server selects a table.

- The primary server drops the table that the standby server is currently selecting.

- The primary server sends XLOG records related to the DROP TABLE command.

- The standby server suspends replaying the WAL data for the DROP TABLE command for 30 seconds by default (configurable with max_standby_archive_delay or max_standby_streaming_delay).

- If the conflict is not resolved within the time specified by these parameters (i.e., the SELECT command is not completed), the SELECT command will return an error and terminate.

- Primary

testdb=# -- Primary

testdb=# DROP TABLE tbl;

DROP TABLE

testdb=#- Standby

testdb=# -- Standby

testdb=# SELECT count(*) FROM tbl;

ERROR: canceling statement due to conflict with recovery

DETAIL: User was holding a relation lock for too long.The cause of this conflict is the WAL data generated by the Access Exclusive Lock acquired internally by the DROP TABLE command on the primary1, which conflicts with the standby’s SELECT command. (As described in Section 9.5.1, WAL data includes not only changes to data but also lock information.)

According to the official document, the causes of conflicts can be classified into the following three types:

- Access Exclusive Locks on the primary server: These conflict with any lock on the standby. Refer to the official document for a list of commands that acquire Access Exclusive Locks, including LOCK IN ACCESS EXCLUSIVE MODE, DROP TABLE, TRUNCATE, REINDEX, VACUUM FULL, etc.

- Dropping databases or tablespaces.

- Applying a vacuum cleanup record from WAL: If standby transactions can still see rows being removed or if queries are accessing the affected page.

Here is another example: the primary server deletes rows and performs the VACUUM command on a table being selected by the standby.

- Primary

testdb=# -- Primary

testdb=# DELETE FROM FROM tbl

testdb-# WHERE data > 100000;

DELETE 1050

testdb=# VACUUM tbl;

VACUUM

testdb=#- Standby

testdb=# -- Standby

testdb=# SELECT count(*) FROM tbl;

ERROR: canceling statement due to conflict with recovery

DETAIL: User query might have needed to see row versions that must be removed.Conflicts caused by VACUUM processing are particularly troublesome because they can occur not only during explicitly run VACUUM commands but also during autovacuum operations, which is described in Section 6.5.

11.2.4.1. Mitigating Conflicts Caused by Locking

On standby servers, conflicts can be mitigated by increasing the values of max_standby_archive_delay and max_standby_streaming_delay (default: 30 seconds). These settings allow the standby to delay replaying WAL data, reducing the likelihood of conflicts.

However, these parameters cannot always eliminate conflicts.

Additionally, they affect other PostgreSQL backends on the standby, preventing them from accessing the latest data during the delay. This means the standby server is not fully synchronous during such conflicts.

It is essential to configure these parameters while carefully considering the disadvantages of the settings and the operational trade-offs.

11.2.4.2. Avoiding Conflicts Caused by Vacuum Processing

On the primary server,

by setting the parameter hot_standby_feedback (default: off) to on,

the primary delays deleting data that the standby needs during vacuum processing,

based on the standby’s state. This helps avoid conflicts caused by vacuum operations.

The standby’s state is sent to the primary at intervals defined by wal_receiver_status_interval (default: 10 seconds).

While enabling hot_standby_feedback resolves vacuum-induced conflicts, it has the following drawbacks for the primary server:

-

Table and Index Bloat: Retaining old tuples visible to the standby prevents vacuuming, leading to increased table and index bloat on the primary.

-

WAL Retention Issues: Retaining additional data for standby consistency can increase WAL usage.

Issuing the pg_stat_database_conflicts on a standby server displays the causes and number of conflicts:

testdb=# -- Standby

testdb=# \x

Expanded display is on.

testdb=# SELECT * FROM pg_stat_database_conflicts WHERE datname = 'testdb';

-[ RECORD 1 ]------------+-------

datid | 16384

datname | testdb

confl_tablespace | 0

confl_lock | 1

confl_snapshot | 1

confl_bufferpin | 0

confl_deadlock | 0

confl_active_logicalslot | 0Until version 15, the vacuum_defer_cleanup_age parameter was supported to defer the deletion of dead tuples during a vacuum operation. If a positive number was set, vacuum operations would defer deleting the dead tuples for the specified number of transactions.

This parameter was removed in version 16 because it could not always eliminate conflicts, and using hot_standby_feedback and Replication Slots, described in Section 11.4, could manage conflicts more effectively.

-

More precisely, in situations where the primary drops objects that the standby is accessing, streaming replication is designed to transition to a conflict state. This serves as a grace period to prevent the standby from suddenly returning an error.

As part of this design, the acquisition of Access Exclusive Locks is recorded as XLOG records. Note that Access Exclusive Locks do not generate XLOG records if wal_level is set to minimal. That is, XLOG records for these locks are required for replication, not for ordinary recovery.

Therefore, this type of conflict is not caused accidentally by the Access Exclusive Lock itself but is intentionally introduced to avoid sudden errors on the standby. ↩︎