9.1. Overview

Let’s take a look at the overview of the WAL mechanism. To clarify the issue that WAL solves, the first subsection shows what happens when a crash occurs if PostgreSQL does not implement WAL. The second subsection introduces some key concepts and shows an overview of the main subjects in this chapter: the writing of WAL data and the database recovery process. The final subsection completes the overview of WAL by adding one more key concept.

In this section, to simplify the description, the table TABLE_A which contains just one page has been used.

9.1.1. Insertion Operations without WAL

As described in Chapter 8, every DBMS implements a shared buffer pool to provide efficient access to the relation’s pages.

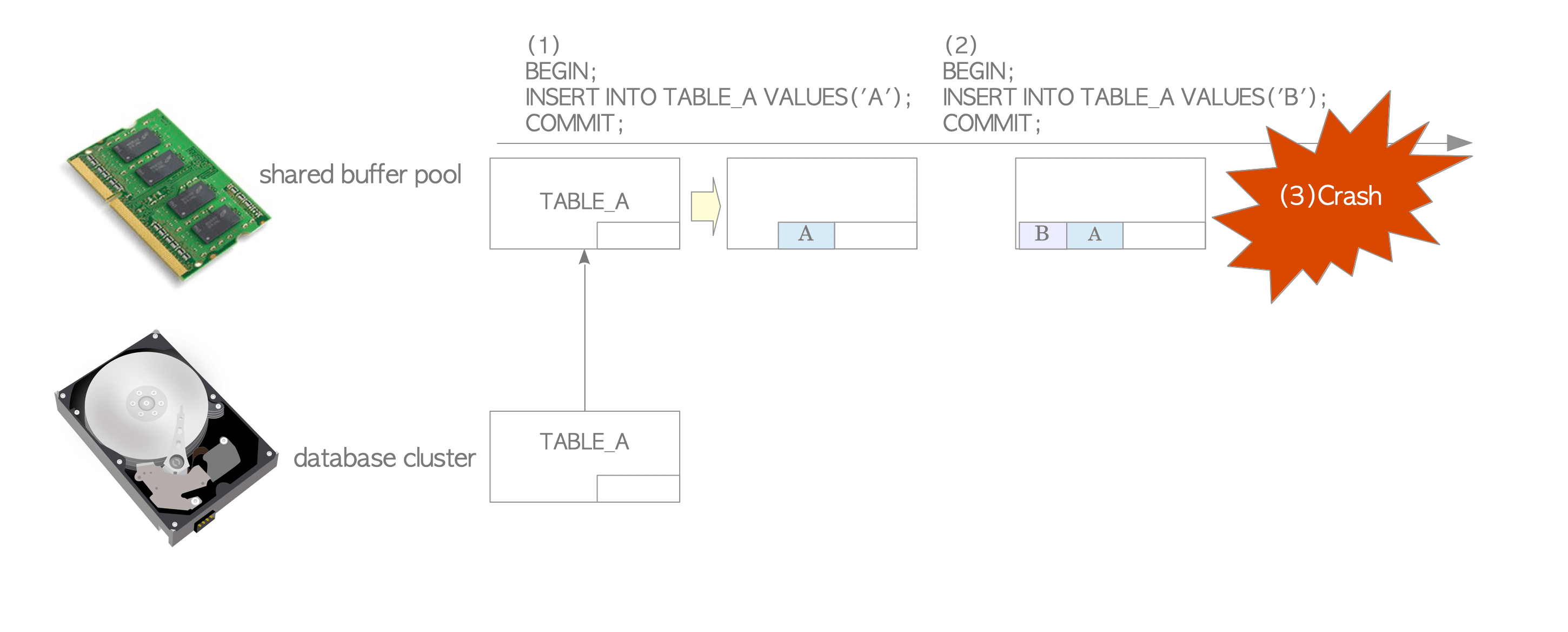

Assume that we insert some data tuples into TABLE_A on PostgreSQL which does not implement the WAL feature. This situation is illustrated in Figure 9.1.

Figure 9.1. Insertion operations without WAL.

-

When we issue the first INSERT statement, PostgreSQL loads the TABLE_A page from the database cluster into the in-memory shared buffer pool and inserts a tuple into the page. The page is not written to the database cluster immediately. (As mentioned in Chapter 8, modified pages are generally called dirty pages.)

-

When we issue the second INSERT statement, PostgreSQL inserts a new tuple into the page in the buffer pool. The page has not been written to storage yet.

-

If the operating system or PostgreSQL server should fail for any reason, such as a power failure, all of the inserted data would be lost.

Therefore, a database without WAL is vulnerable to system failures.

Before WAL was introduced (versions 7.0 or earlier), PostgreSQL did synchronous writes to the disk by issuing a sync system call whenever a page was changed in memory in order to ensure durability. This made modification commands such as INSERT and UPDATE very poor-performing.

9.1.2. Insertion Operations and Database Recovery

To deal with the system failures mentioned above without compromising performance, PostgreSQL supports WAL. In this subsection, some keywords and key concepts are described, followed by the writing of WAL data and the recovery of the database.

PostgreSQL writes all modifications as history data into a persistent storage to prepare for failures. In PostgreSQL, the history data are known as XLOG record(s) or WAL data.

XLOG records are written into the in-memory WAL buffer by change operations such as insertion, deletion, or commit action. They are immediately written into a WAL segment file on the storage when a transaction commits or aborts. (To be precise, the writing of XLOG records may occur in other cases. The details will be described in Section 9.5.) The LSN (Log Sequence Number) of an XLOG record represents the location where its record is written on the transaction log. The LSN of a record is used as the unique id of the XLOG record.

When considering how a database system recovers, one question that may arise is: what point does PostgreSQL start to recover from? The answer is the REDO point. That is the location to write the XLOG record at the moment when the latest checkpoint is started. (Checkpoints in PostgreSQL are described in Section 9.7.) In fact, the database recovery process is closely linked to the checkpoint process, and both of these processes are inseparable.

The WAL and checkpoint process were implemented at the same time in version 7.1.

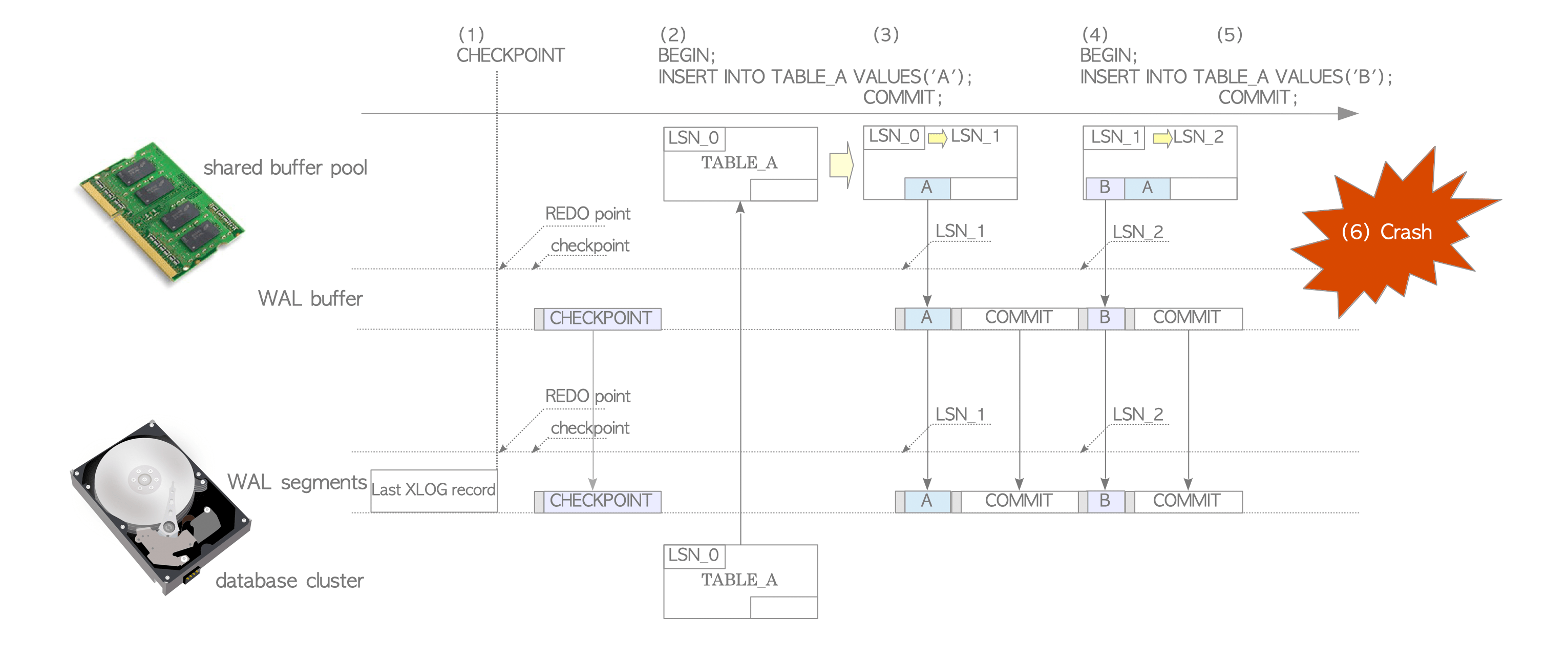

As the introduction of major keywords and concepts has just finished, the following is a description of the tuple insertion with WAL. See Figure 9.2 and the following description.

Figure 9.2. Insertion operations with WAL.

TABLE_A’s LSN shows the value of ‘pd_lsn’ within the page-header of TABLE_A.

Page’s LSN is the same manner.

-

A checkpointer, a background process, periodically performs checkpointing. Whenever the checkpointer starts, it writes a XLOG record called checkpoint record to the current WAL segment. This record contains the location of the latest REDO point.

-

When we issue the first INSERT statement, PostgreSQL loads the TABLE_A page into the shared buffer pool, inserts a tuple into the page, creates and writes a XLOG record of this statement into the WAL buffer at the location LSN_1, and updates the TABLE_A’s LSN from LSN_0 to LSN_1.

In this example, this XLOG record is a pair of a header-data and the tuple entire. -

As this transaction commits, PostgreSQL creates and writes a XLOG record of this commit action into the WAL buffer, and then, writes and flushes all XLOG records on the WAL buffer to the WAL segment file, from LSN_1.

-

When we issue the second INSERT statement, PostgreSQL inserts a new tuple into the page, creates and writes this tuple’s XLOG record to the WAL buffer at LSN_2, and updates the TABLE_A’s LSN from LSN_1 to LSN_2.

-

When this statement’s transaction commits, PostgreSQL operates in the same manner as in step (3).

-

Imagine that an operating system failure occurs. Even though all of the data in the shared buffer pool is lost, all modifications to the page have been written to the WAL segment files as history data.

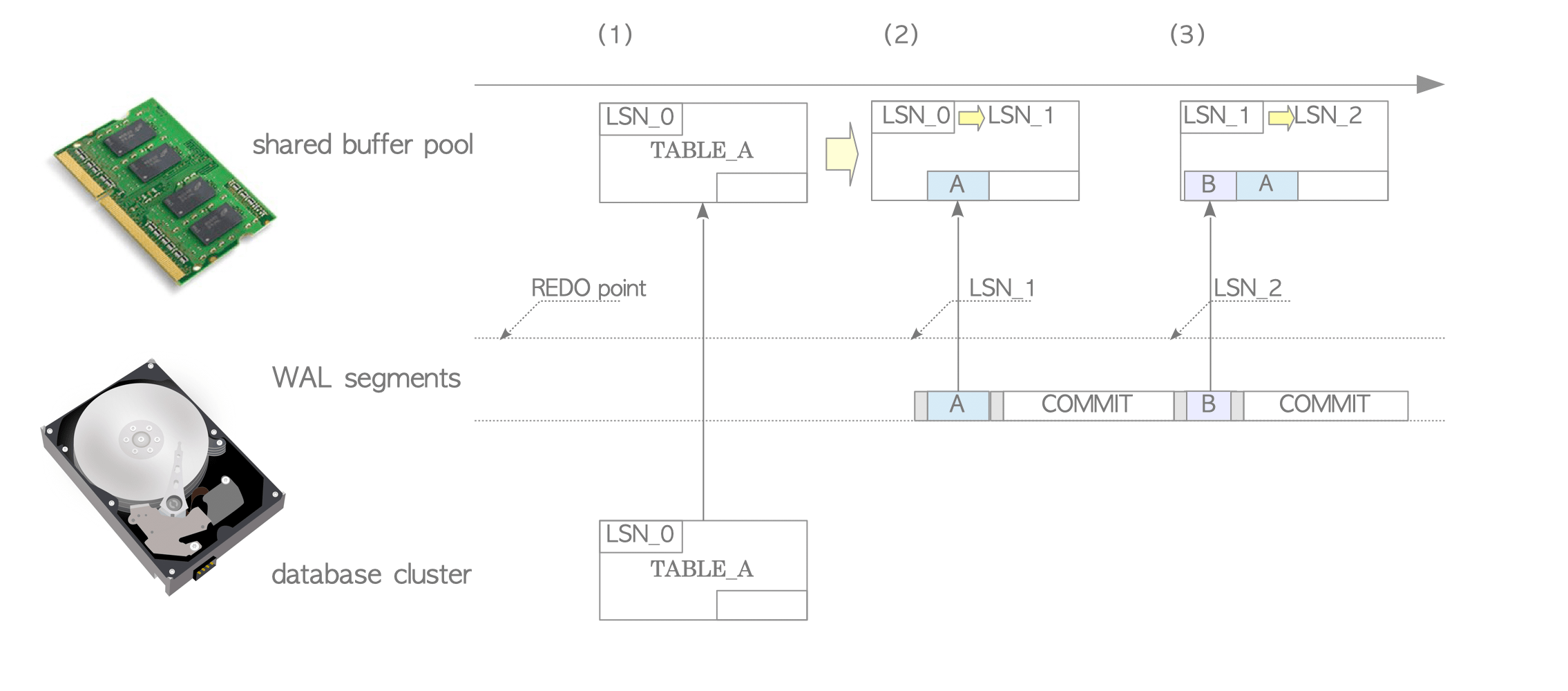

The following instructions show how to recover our database cluster back to the state immediately before the crash. There is no need to do anything special, since PostgreSQL will automatically enter recovery-mode by restarting. See Figure 9.3. PostgreSQL will sequentially read and replay XLOG records within the appropriate WAL segment files from the REDO point.

Figure 9.3. Database recovery using WAL.

-

PostgreSQL reads the XLOG record of the first INSERT statement from the appropriate WAL segment file, and loads the TABLE_A page from the database cluster into the shared buffer pool.

-

Before trying to replay the XLOG record, PostgreSQL compares the XLOG record’s LSN with the corresponding page’s LSN. the reason for doing this will be described in Section 9.8. The rules for replaying XLOG records are as follows:

If the XLOG record’s LSN is larger than the page’s LSN, the data-portion of the XLOG record is inserted into the page, and the page’s LSN is updated to the XLOG record’s LSN.

On the other hand, if the XLOG record’s LSN is smaller, there is nothing to do other than to read next WAL record.

In this example, the XLOG record is replayed since the XLOG record’s LSN (LSN_1) is larger than the TABLE_A’s LSN (LSN_0). Then, TABLE_A’s LSN is updated from LSN_0 to LSN_1. -

PostgreSQL replays the remaining XLOG record(s) in the same way.

PostgreSQL can recover itself in this way by replaying XLOG records written in WAL segment files in chronological order. Thus, PostgreSQL’s XLOG records are REDO log.

PostgreSQL does not support UNDO log.

Although writing XLOG records certainly costs a certain amount, it is nothing compared to writing the entire modified pages. We are confident that the benefit we gain, namely system failure tolerance, is greater than the amount we pay.

9.1.3. Full-Page Writes

Suppose that the TABLE_A’s page data on the storage is corrupted because the operating system has failed while the background writer process has been writing the dirty pages. As XLOG records cannot be replayed on the corrupted page, we would need an additional feature.

PostgreSQL supports a feature called full-page writes to deal with such failures. If it is enabled, PostgreSQL writes a pair of the header data and the entire page as an XLOG record during the first change of each page after every checkpoint. (This is the default setting.) In PostgreSQL, such a XLOG record containing the entire page is called a backup block (or full-page image).

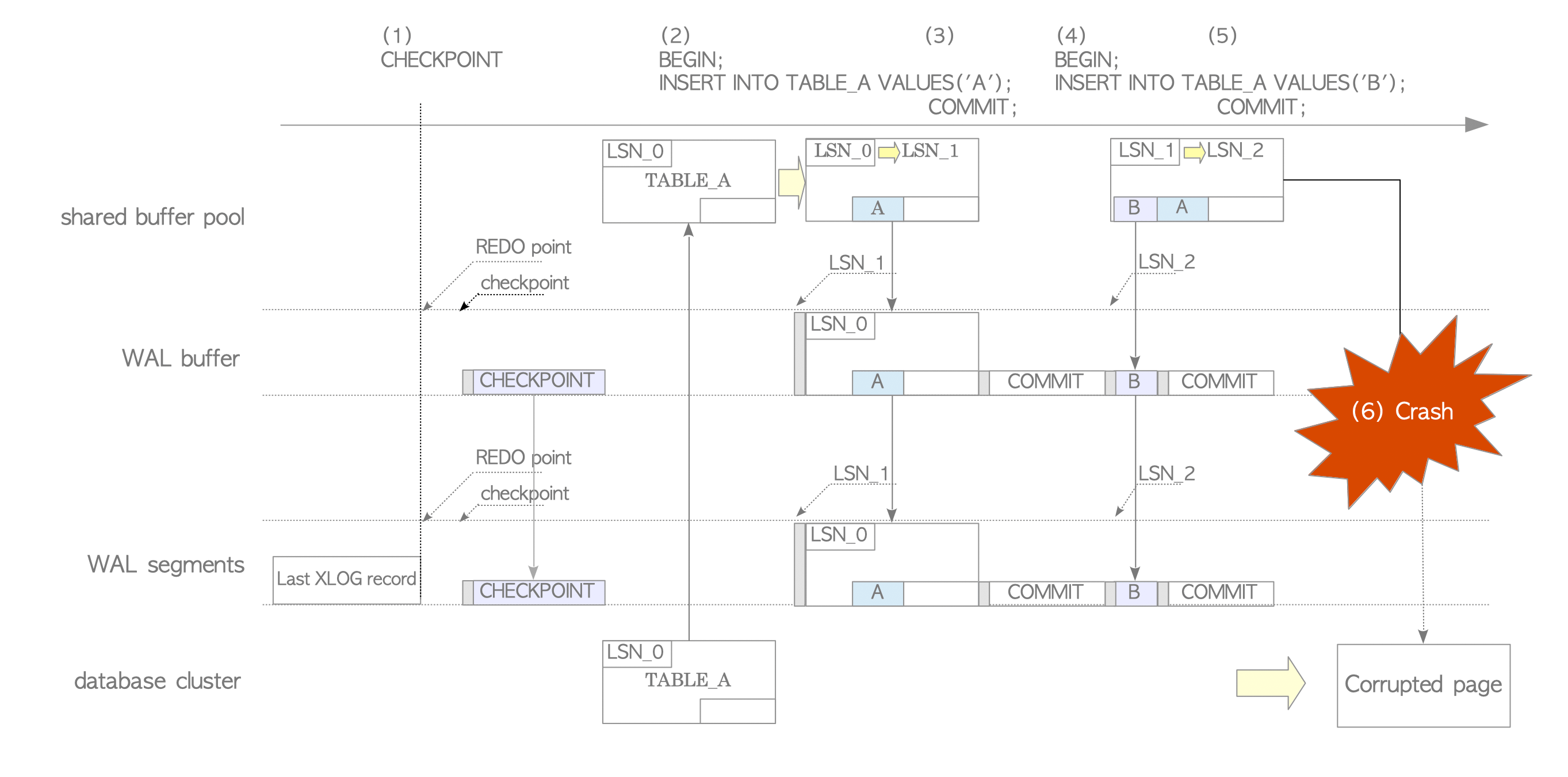

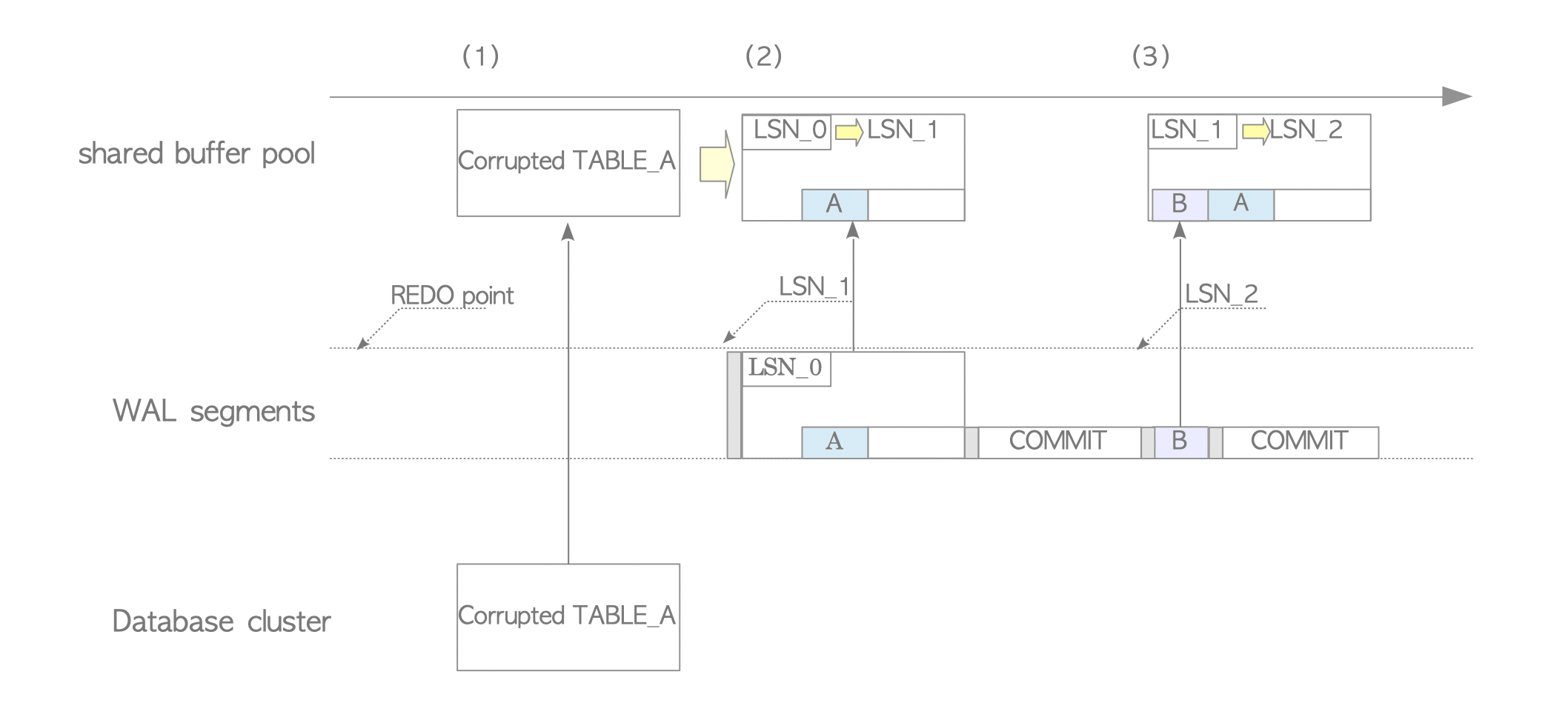

Let’s describe the insertion of tuples again, but with full-page writes enabled. See Figure 9.4 and the following description.

Figure 9.4. Full page writes.

-

The checkpointer starts a checkpoint process.

-

When we insert the first INSERT statement, PostgreSQL operates in the same way as in the previous subsection, except that this XLOG record is the backup block of this page, because this is the first writing of this page after the latest checkpoint. (In other words, it contains the entire page.)

-

As this transaction commits, PostgreSQL operates in the same way as in the previous subsection.

-

When we insert the second INSERT statement, PostgreSQL operates in the same way as in the previous subsection, since this XLOG record is not a backup block.

-

When this statement’s transaction commits, PostgreSQL operates in the same way as in the previous subsection.

-

To demonstrate the effectiveness of full-page writes, let’s consider the case in which the TABLE_A page on the storage has been corrupted due to an operating system failure that occurred while the background writer was writing it to the storage (HDD or SSD).

Restart the PostgreSQL server to repair the broken cluster. See Figure 9.5 and the following description.

Figure 9.5. Database recovery with backup block.

-

PostgreSQL reads the XLOG record of the first INSERT statement and loads the corrupted TABLE_A page from the database cluster into the shared buffer pool. In this example, the XLOG record is a backup block, because the first XLOG record of each page is always its backup block according to the writing rule of full-page writes.

-

When a XLOG record is its backup block, another rule of replaying is applied: the record’s data-portion (i.e., the page itself) is to be overwritten onto the page regardless of the values of both LSNs, and the page’s LSN updated to the XLOG record’s LSN.

In this example, PostgreSQL overwrites the data-portion of the record to the corrupted page, and updates the TABLE_A’s LSN to LSN_1. In this way, the corrupted page is restored by its backup block. -

Since the second XLOG record is a non-backup block, PostgreSQL operates in the same way as the instruction in the previous subsection.

In this way, PostgreSQL can recover the database even if some data write errors occur due to a process or operating system crash.

As mentioned above, WAL can prevent data loss due to process or operating system crashes. However, if a file system or media failure occurs, the data will be lost. To deal with such failures, PostgreSQL provides online backup and replication features.

If online backups are taken regularly, the database can be restored from the most recent backup, even if a media failure occurs. However, it is important to note that the changes made after taking the last backup cannot be restored.

The synchronous replication feature can store all changes to another storage or host in real time. This means that if a media failure occurs on the primary server, the data can be restored from the secondary server.

No. Synchronous replication does not eliminate the need for backups.

Operating a DBMS requires addressing not only hardware or software failures but also data loss and errors caused by human mistakes or software bugs.

For instance, if critical data is accidentally deleted, the deletion is immediately reflected on the replicated standby server. Similarly, if erroneous data is written due to a mistake, the error propagates instantly across all replication servers.

In such cases, replication cannot restore the lost or corrupted data. The only way to recover from these critical failures is by using backup data (+ archive logs).