18. Related Topics

This chapter delves into the evolution of the Transformer model, exploring its potential applications beyond natural language processing.

18.1. Large Language Models (LLMs)

18.2. Computer Vision

18.3. Time-series Analysis

18.4. Reinforcement Learning

18.5. Transformer Alternatives

18.1. Large Language Models (LLMs)

LLMs, a type of AI trained on massive amounts of text data, learn the patterns of human language and generate human-quality text. They have already had a significant impact on society.

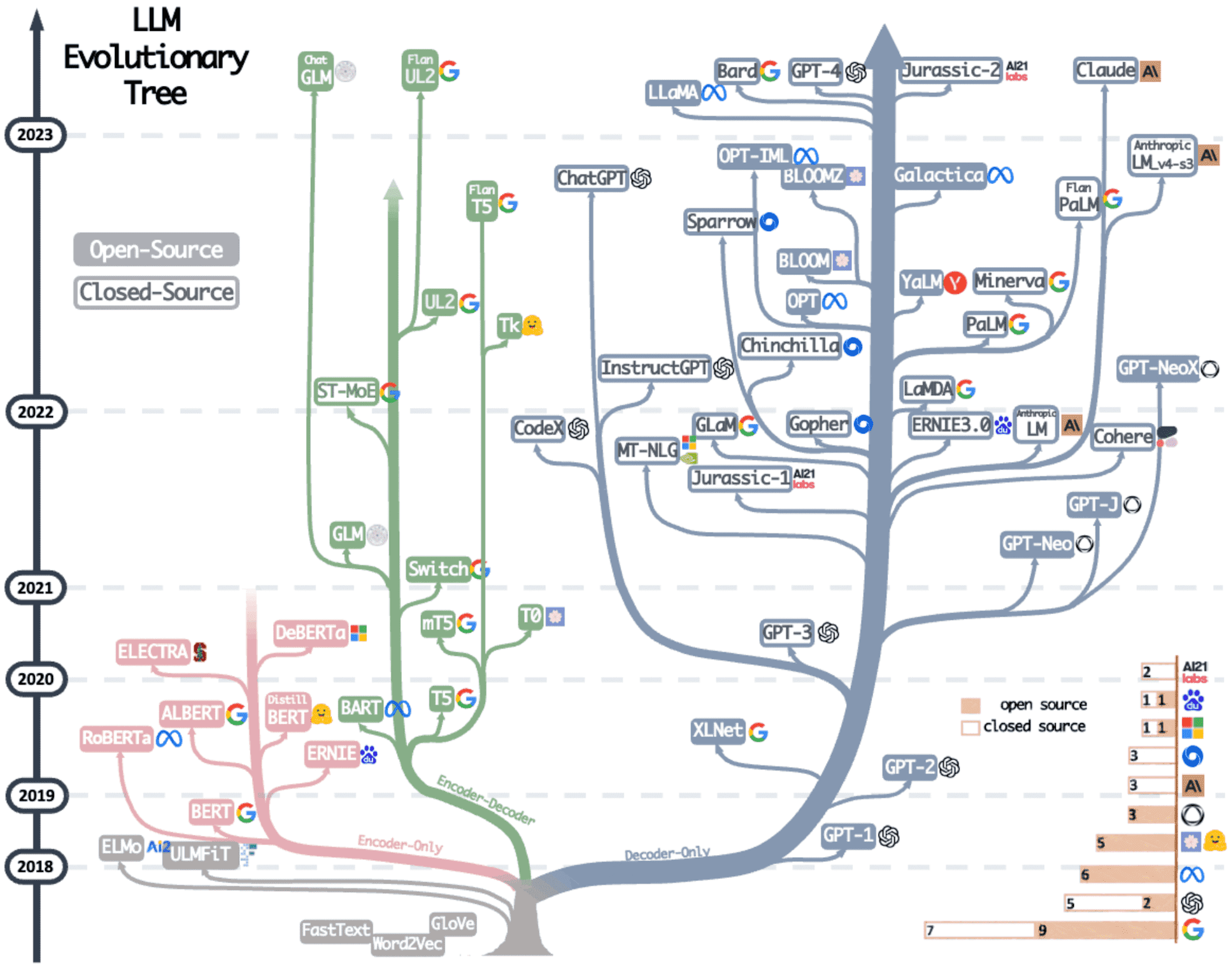

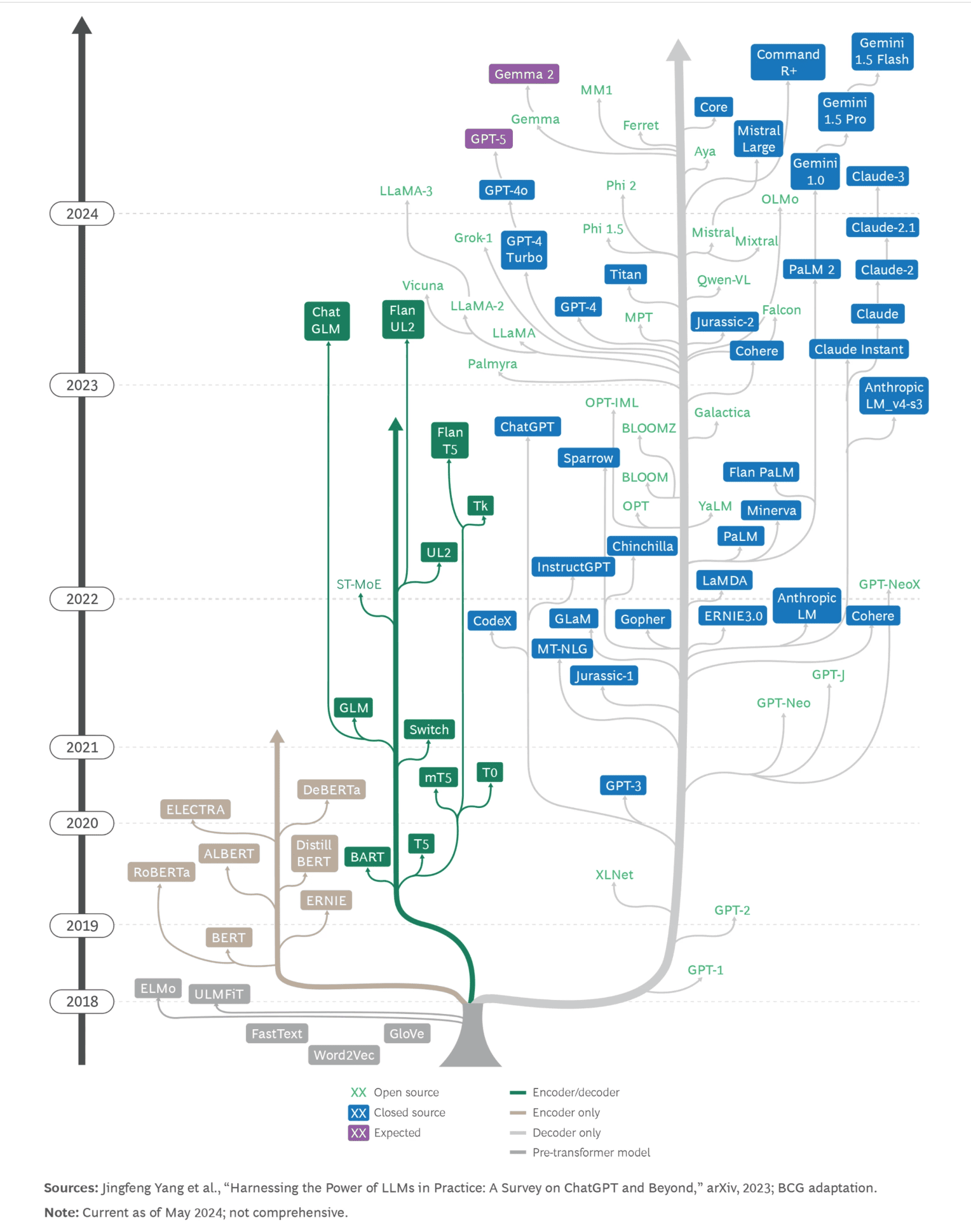

According to the paper “Harnessing the Power of LLMs in Practice: A Survey on ChatGPT and Beyond”, three main streams of LLM technology trends exist:

- Encoder-Only

- Encoder-Decoder

- Decoder-Only

See the following figures cited from the paper (by J. Yang, et al.) and article (by Boston Consulting Group):

Refer to the original figure for details.

Nearly all of these trends are rooted in the Transformer model[1]. For example, BERT uses only the encoder component of the Transformer, while GPT[2] uses only the decoder component.
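In practice, the difference between encoder-only and decoder-only models shows up in the self-attention mask. An encoder such as BERT lets every token attend to every other token (bidirectional attention), whereas a GPT-style decoder applies a causal mask so that position i can only attend to positions 0..i. The following is a minimal pure-Python sketch of that idea, assuming scaled dot-product attention over toy one-dimensional embeddings (d = 1); the function name `attention_weights` is illustrative and not taken from any library.

```python
import math

def attention_weights(scores, causal):
    """Row-wise softmax over attention scores.

    If causal is True, position i may only attend to positions j <= i
    (decoder-style masking); otherwise every position is visible
    (encoder-style, bidirectional attention).
    """
    n = len(scores)
    weights = []
    for i in range(n):
        # Masked positions get -inf so that exp(-inf) == 0 after the softmax.
        row = [scores[i][j] if (not causal or j <= i) else -math.inf
               for j in range(n)]
        m = max(row)  # subtract the max for numerical stability
        exps = [math.exp(s - m) for s in row]
        total = sum(exps)
        weights.append([e / total for e in exps])
    return weights

# Toy 1-D "embeddings" for a 3-token sequence; score = q_i * k_j / sqrt(d).
d = 1
x = [0.5, 1.0, -0.5]
scores = [[xi * xj / math.sqrt(d) for xj in x] for xi in x]

encoder_w = attention_weights(scores, causal=False)  # BERT-like
decoder_w = attention_weights(scores, causal=True)   # GPT-like

for row in decoder_w:
    print([round(w, 3) for w in row])
```

In the decoder weights, every entry above the diagonal is zero, which is what allows a decoder-only model to be trained to predict each next token without seeing it.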

Currently, the most powerful models are developed commercially. However, numerous open-source models have also been released, and tools such as Ollama make it possible to run many of them on personal computers or other consumer hardware.

- A Survey on Large Language Models with some Insights on their Capabilities and Limitations (v1: 3.Jan.2025)

- Large Language Models: A Survey (v1: 9.Feb.2024, v2: 20.Feb.2024)

- Large Language Models for Software Engineering: Survey and Open Problems (v1: 21.Aug.2023, v6: 10.Apr.2024)

- A Survey of Large Language Models (v1: 31.Mar.2023, v15: 13.Oct.2024)

- A Survey on Evaluation of Large Language Models (v1: 6.Jul.2023, v9: 29.Dec.2023)

- Harnessing the Power of LLMs in Practice: A Survey on ChatGPT and Beyond (v1: 26.Apr.2023, v2: 27.Apr.2023)

- Transformer models: an introduction and catalog (v1: 12.Feb.2023, v4: 31.Mar.2024)

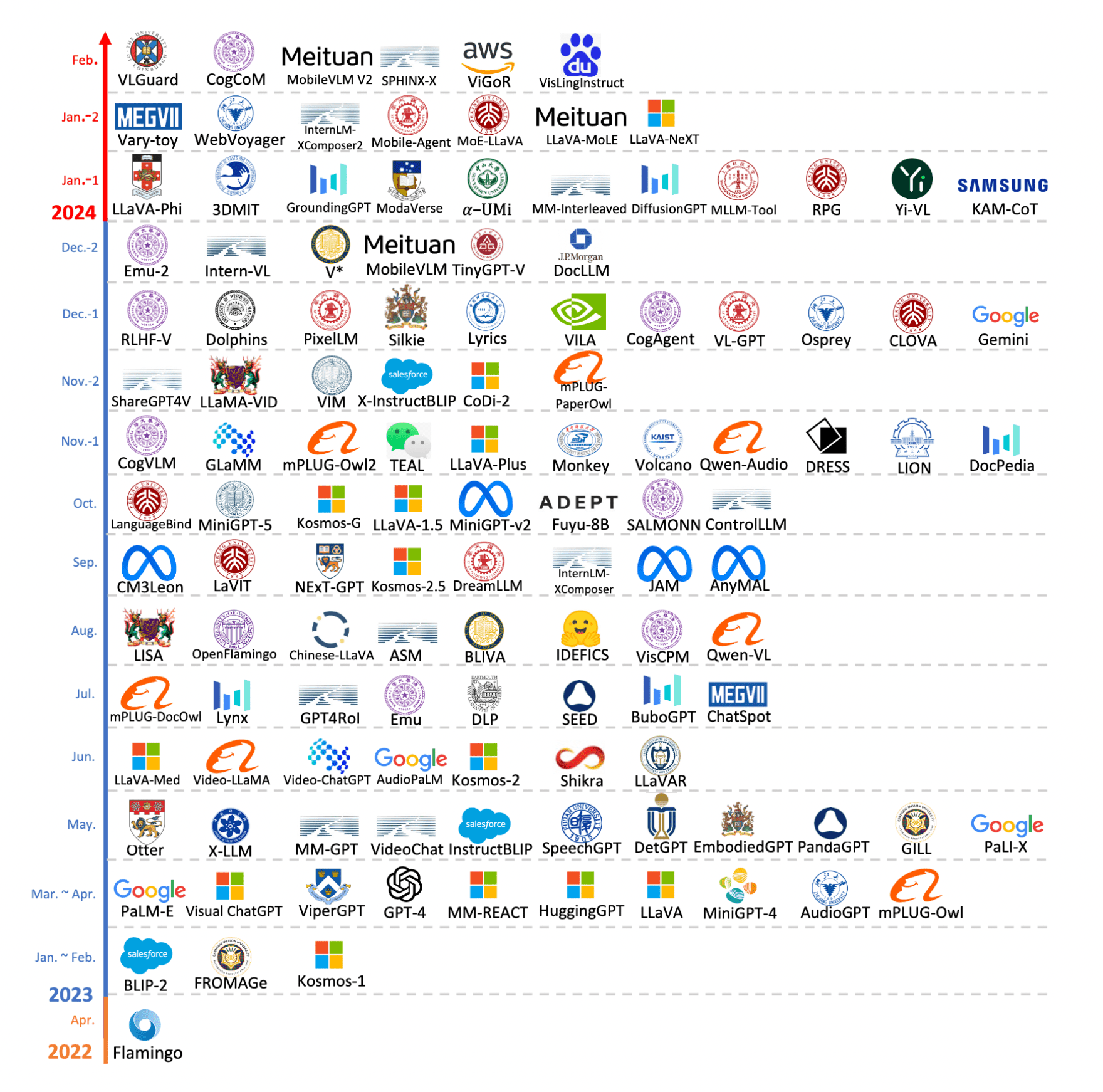

18.1.1. MultiModal Large Language Models

Research publications on multimodal LLMs have seen a significant surge since 2023.

The following figure is cited from the survey:

- MM-LLMs: Recent Advances in MultiModal Large Language Models (v1: 24.Jan.2024, v5: 28.May.2024)

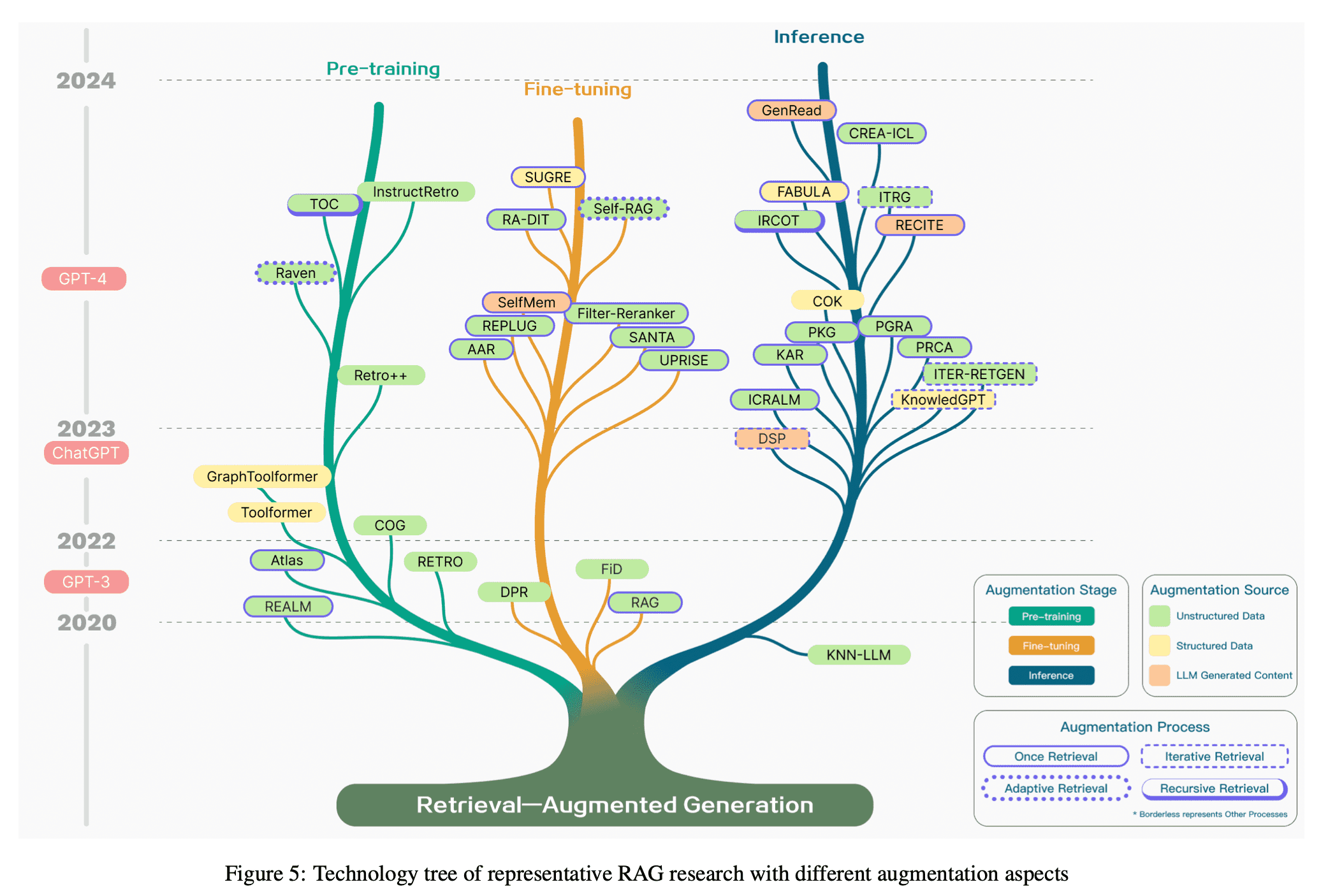

18.1.2. Retrieval-Augmented Generation (RAG) for LLMs

RAG is a technique used to enhance the quality and reliability of text generated by LLMs by incorporating factual information retrieved from external knowledge sources.

The following figure is cited from the survey:

- Retrieval-Augmented Generation for Large Language Models: A Survey (v1: 18.Dec.2023, v5: 27.Mar.2024)

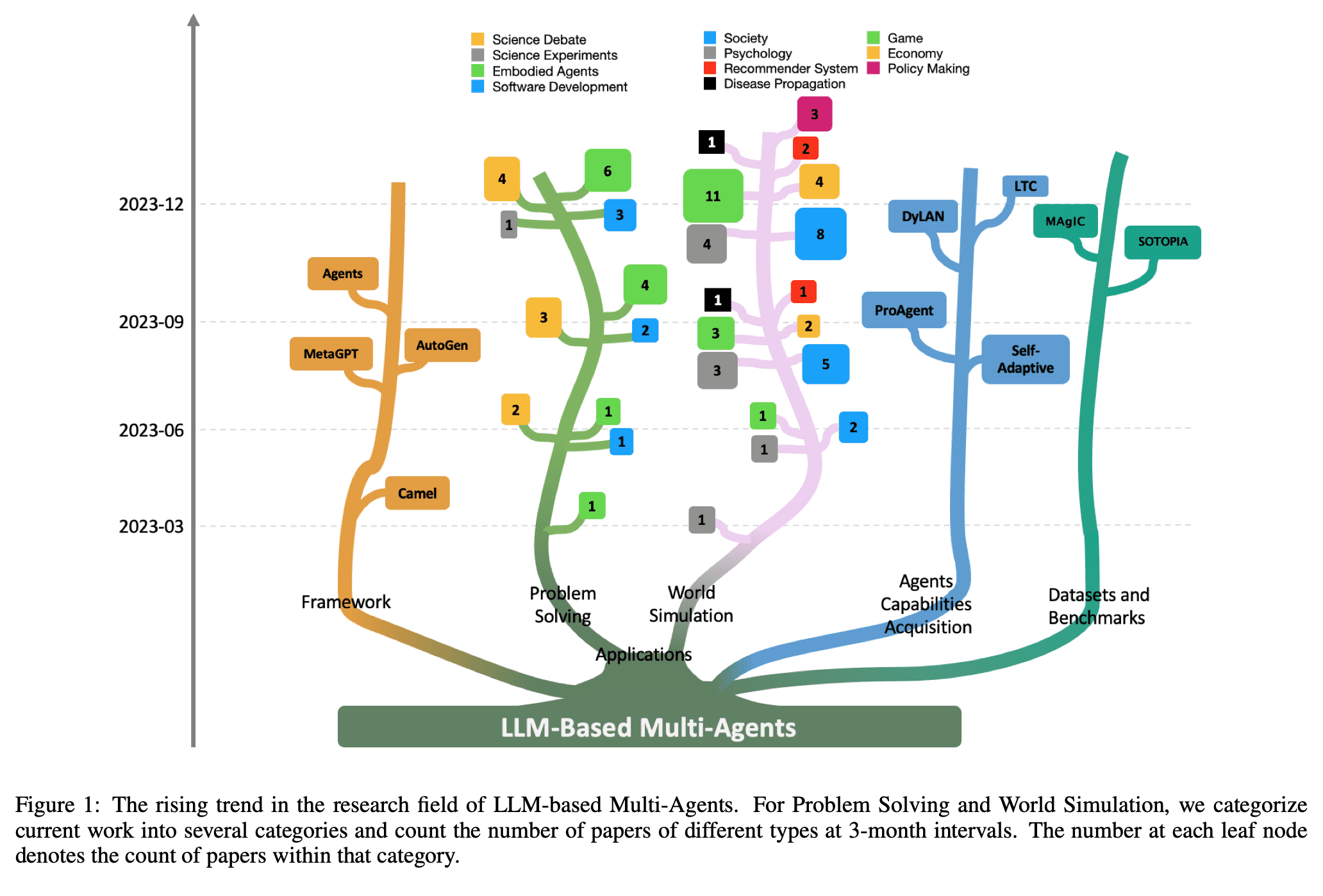
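The retrieve-then-generate flow of RAG can be sketched in a few lines. This is a minimal sketch under simplifying assumptions: a tiny in-memory corpus and bag-of-words cosine similarity stand in for the dense embeddings and vector databases typically used in practice, and the helper names (`retrieve`, `build_prompt`) and the prompt template are hypothetical. The generation step itself is left to whatever LLM receives the prompt.

```python
import math
import re
from collections import Counter

def tokenize(text):
    # Lowercase word tokens; a stand-in for a real embedding model.
    return re.findall(r"[a-z0-9]+", text.lower())

def cosine(a, b):
    # Cosine similarity between two bag-of-words Counters.
    dot = sum(a[t] * b[t] for t in a)
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0

def retrieve(query, corpus, k=2):
    # Rank documents by similarity to the query and keep the top-k.
    q = Counter(tokenize(query))
    ranked = sorted(corpus, key=lambda d: cosine(q, Counter(tokenize(d))),
                    reverse=True)
    return ranked[:k]

def build_prompt(query, docs):
    # Hypothetical template: retrieved facts are placed before the question
    # so that the LLM can ground its answer in them.
    context = "\n".join(f"- {d}" for d in docs)
    return f"Answer using only the context below.\nContext:\n{context}\nQuestion: {query}"

corpus = [
    "PostgreSQL uses MVCC to provide concurrent access.",
    "The Transformer was introduced in 2017.",
    "Vacuum in PostgreSQL reclaims storage occupied by dead tuples.",
]
query = "How does PostgreSQL reclaim dead tuples?"
prompt = build_prompt(query, retrieve(query, corpus, k=1))
print(prompt)
```

The design point is that the model's parameters are untouched: factual grounding comes entirely from what is placed into the prompt, so updating the knowledge source does not require retraining.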

18.1.3. LLM based Multi-Agents

As LLMs have evolved, systems have been proposed in which multiple LLMs work together to complete tasks, rather than a single LLM handling all of the processing. A major approach is LLM-based multi-agent systems.

Early LLM-based multi-agent systems were straightforward: a coordinator managed multiple expert agents who could answer questions within their specific domains.
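The early coordinator pattern can be sketched as follows. This is a toy illustration, not a specific system from the surveys: the "experts" are plain Python functions standing in for LLM calls, and the coordinator routes a question by simple keyword matching, whereas a real coordinator would typically ask an LLM to choose the expert. All names and the keyword table are hypothetical.

```python
from typing import Callable, Dict, List

# Hypothetical expert agents; in a real system each would wrap an LLM call
# with a domain-specific system prompt.
def database_expert(question: str) -> str:
    return f"[database expert] answering: {question}"

def network_expert(question: str) -> str:
    return f"[network expert] answering: {question}"

def general_expert(question: str) -> str:
    return f"[general expert] answering: {question}"

# Keyword table used by the coordinator to pick a domain (an assumption).
ROUTES: Dict[str, List[str]] = {
    "database": ["sql", "index", "query", "postgresql"],
    "network": ["latency", "dns", "tcp", "packet"],
}

EXPERTS: Dict[str, Callable[[str], str]] = {
    "database": database_expert,
    "network": network_expert,
    "general": general_expert,
}

def coordinator(question: str) -> str:
    """Route the question to the first expert whose keywords match."""
    words = set(question.lower().replace("?", "").split())
    for domain, keywords in ROUTES.items():
        if words & set(keywords):
            return EXPERTS[domain](question)
    return EXPERTS["general"](question)  # fallback when nothing matches

print(coordinator("Why is this SQL query slow?"))
print(coordinator("What causes high DNS latency?"))
```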

However, LLM-based multi-agent systems are improving rapidly. For example, multiple agents (LLMs) can now debate with one another to solve a given task.
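The debate idea can likewise be sketched as a round-based protocol. In this toy version, which is an assumption for illustration rather than a method from a specific paper, each agent sees the other agents' answers from the previous round and may revise its own. The stub agents simply adopt an answer held by a strict majority of the others, and the loop stops when all agents agree or a round limit is reached. In a real system, `stub_agent` would be an LLM reading the other agents' arguments.

```python
from collections import Counter
from typing import List

def stub_agent(own_answer: str, others: List[str]) -> str:
    # Stand-in for an LLM that reads the other agents' arguments.
    # Toy rule: switch to the most common answer among the others if a
    # strict majority of them hold it; otherwise keep the current answer.
    if not others:
        return own_answer
    answer, count = Counter(others).most_common(1)[0]
    return answer if count > len(others) / 2 else own_answer

def debate(initial_answers: List[str], max_rounds: int = 3) -> List[str]:
    answers = list(initial_answers)
    for _ in range(max_rounds):
        if len(set(answers)) == 1:  # consensus reached
            break
        # All agents update simultaneously from the previous round's answers.
        answers = [
            stub_agent(a, answers[:i] + answers[i + 1:])
            for i, a in enumerate(answers)
        ]
    return answers

print(debate(["42", "41", "42"]))
```

The round limit matters: without it, agents with conflicting update rules can oscillate indefinitely instead of converging.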

Moreover, reinforcement learning techniques are being leveraged to improve the discussion process itself. Just as reinforcement learning remarkably improved machine game-playing, its techniques are now being applied to improve the discussion skills of agents in LLM-based multi-agent systems.

I believe that by applying these techniques, machines can eventually acquire human-like thinking methods, such as chain-of-thought reasoning.

- Large Language Model based Multi-Agents: A Survey of Progress and Challenges (v1: 21.Jan.2024, v2: 19.Apr.2024)

- LLM-based Multi-Agent Reinforcement Learning: Current and Future Directions (17.May.2024)

- From LLMs to LLM-based Agents for Software Engineering: A Survey of Current, Challenges and Future (5.Aug.2024)

- A survey on large language model based autonomous agents (22.Mar.2024)

- A Survey on Recent Advances in LLM-Based Multi-turn Dialogue Systems (28.Feb.2024)

18.1.4. LLM for Site Reliability Engineering (SRE)

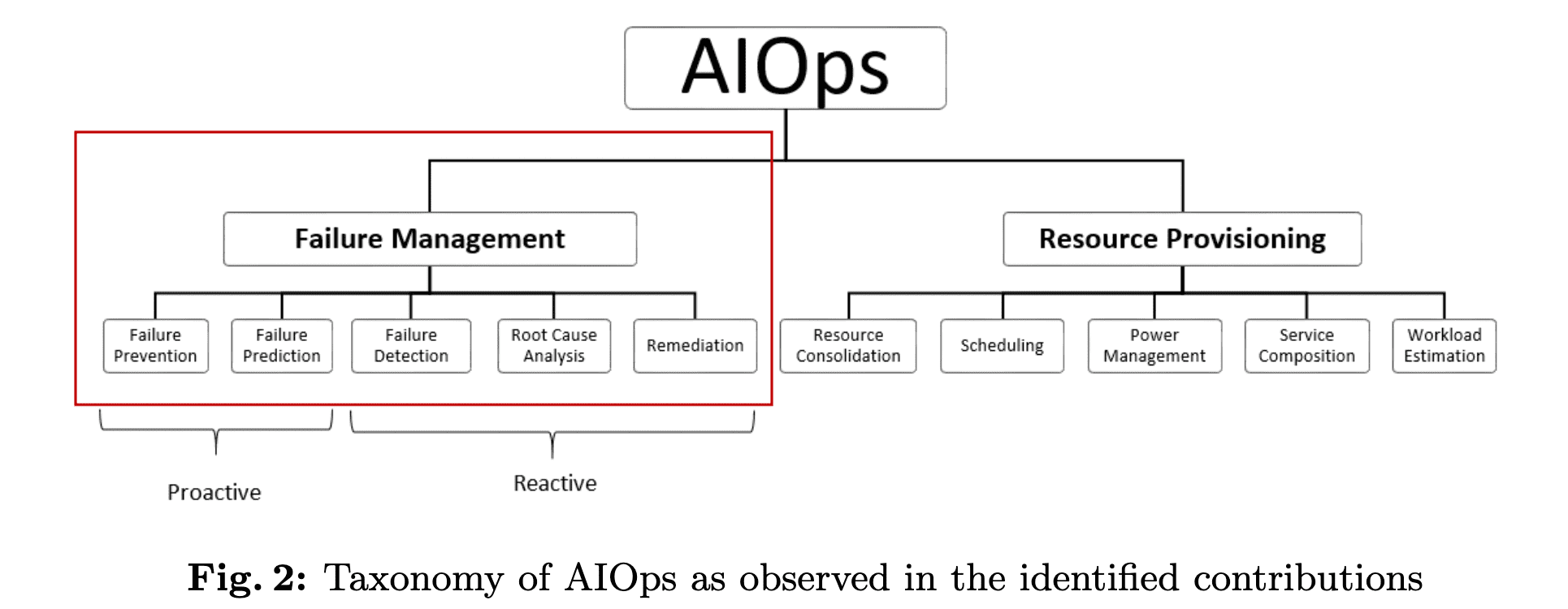

Studies on AIOps (Artificial Intelligence for IT Operations) have been conducted since relatively early in the history of IT operations[3]. These studies have focused on areas such as failure management and resource provisioning, mainly applying traditional machine learning methods to tasks like anomaly detection and root cause analysis.
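As an example of the traditional, non-LLM approach, a very simple anomaly detector flags metric samples whose z-score (distance from the mean in units of standard deviation) exceeds a threshold. This is a minimal sketch; the threshold of 2.5 and the sample latency values are assumptions for illustration, and production AIOps systems use considerably more robust methods.

```python
import statistics
from typing import List

def zscore_anomalies(values: List[float], threshold: float = 2.5) -> List[int]:
    """Return indices of samples whose |z-score| exceeds the threshold."""
    mean = statistics.fmean(values)
    std = statistics.pstdev(values)  # population standard deviation
    if std == 0:
        return []  # constant series: nothing stands out
    return [i for i, v in enumerate(values) if abs(v - mean) / std > threshold]

# Hypothetical query latencies in milliseconds; the last sample is a spike.
latency_ms = [12, 11, 13, 12, 12, 11, 13, 12, 11, 60]
print(zscore_anomalies(latency_ms))
```

Note that the spike itself inflates the standard deviation, so in short windows a single outlier can never reach a very high z-score. This is one reason practical detectors often prefer robust statistics such as the median.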

Since 2023, the AIOps field has seen the introduction of LLM techniques.

While research with LLMs in this area is still in its early stages, I believe AI has the power to transform the field of Site Reliability Engineering (SRE) from a discipline heavily reliant on individual skills to one that incorporates more engineering and scientific principles.

Commentary Article:

- AIOps

LLM for SRE/DBA

- RCAgent: Cloud Root Cause Analysis by Autonomous Agents with Tool-Augmented Large Language Models (v1: 25.Oct.2023, v3: 2.Aug.2024)

- D-Bot: Database Diagnosis System using Large Language Models (v1: 3.Dec.2023, v2: 6.Dec.2023)

- Panda: Performance Debugging for Databases using LLM Agents

- LLM As DBA (v1: 10.Aug.2023, v2: 11.Aug.2023)

- DB-GPT: Large Language Model Meets Database (19.Jan.2024)

LLM for Cardinality Estimation

- A Survey of Query Optimization in Large Language Models (v1: 23.Dec.2024)

- Can Large Language Models Be Query Optimizer for Relational Databases? (v1: 8.Feb.2025)

- Optimizing LLM Queries in Relational Data Analytics Workloads (v1: 9.Mar.2024, v2: 9.Apr.2025)

- Training-Free Query Optimization via LLM-Based Plan Similarity (v1: 6.Jun.2025)

- A Query Optimization Method Utilizing Large Language Models (v1: 10.Mar.2025)

- OPTIMIZING LLM QUERIES IN RELATIONAL DATA ANALYTICS WORKLOADS

- The Unreasonable Effectiveness of LLMs for Query Optimization (v1: 5.Nov.2024)

18.2. Computer Vision

Similar to the shift from RNNs to Transformers in NLP, computer vision has seen a transition from convolutional neural network (CNN) architectures to transformer-based architectures with the introduction of Vision Transformer (ViT) in 2020.

- A survey of the Vision Transformers and its CNN-Transformer based Variants (v1: 17.May.2023, v4: 27.Jul.2024)

- A Survey on Visual Transformer (v1: 23.Dec.2020, v6: 10.Jul.2023)

- Vision Language Transformers: A Survey (v1: 6.Jul.2023)

- Transformer-based Generative Adversarial Networks in Computer Vision: A Comprehensive Survey (v1: 17.Feb.2023)
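The key step that lets ViT reuse the Transformer is turning an image into a sequence of tokens. The image is split into fixed-size, non-overlapping patches, and each patch is flattened into a vector. ViT then linearly projects these vectors and adds position embeddings before feeding them to a standard Transformer encoder. The sketch below shows only the patch-splitting step for a single-channel image stored as nested lists. The image size, patch size, and the function name `image_to_patches` are illustrative assumptions.

```python
from typing import List

def image_to_patches(image: List[List[float]], patch: int) -> List[List[float]]:
    """Split an H x W single-channel image into flattened patch x patch tokens.

    Patches are read in row-major order, so an H x W image yields
    (H // patch) * (W // patch) tokens, each of length patch * patch.
    """
    h, w = len(image), len(image[0])
    if h % patch or w % patch:
        raise ValueError("image size must be divisible by the patch size")
    tokens = []
    for top in range(0, h, patch):
        for left in range(0, w, patch):
            tokens.append([image[r][c]
                           for r in range(top, top + patch)
                           for c in range(left, left + patch)])
    return tokens

# A 4 x 4 toy image whose pixel values are 0..15.
img = [[float(r * 4 + c) for c in range(4)] for r in range(4)]
patches = image_to_patches(img, patch=2)
print(len(patches), patches[0])
```

At realistic sizes, a 224 x 224 image with 16 x 16 patches yields 14 x 14 = 196 tokens, a sequence length the Transformer handles comfortably.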

18.3. Time-series Analysis

Since sentences in natural language can be viewed as a type of time series data, it is natural to consider applying the Transformer model, originally introduced for NLP, to traditional time series analysis domains.
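One way to make the "time series as language" analogy concrete is to turn real-valued observations into a discrete vocabulary of tokens, so that a Transformer can process them like words. The sketch below loosely follows the mean-scaling and uniform-binning idea described in the Chronos paper listed below. The number of bins, the clipping range, and the function names are assumptions, and the details differ from the actual implementation.

```python
from typing import List, Tuple

def tokenize_series(values: List[float], n_bins: int = 10,
                    low: float = -5.0, high: float = 5.0) -> Tuple[List[int], float]:
    """Map a real-valued series to integer token ids in [0, n_bins).

    1. Mean scaling: divide by the mean absolute value of the series.
    2. Uniform binning: split [low, high) into n_bins equal-width bins;
       values outside the range are clipped to the first or last bin.
    The scale is returned so that predictions can be mapped back later.
    """
    scale = sum(abs(v) for v in values) / len(values) or 1.0  # avoid /0
    width = (high - low) / n_bins
    tokens = []
    for v in values:
        idx = int((v / scale - low) // width)
        tokens.append(min(max(idx, 0), n_bins - 1))
    return tokens, scale

def detokenize(tokens: List[int], scale: float, n_bins: int = 10,
               low: float = -5.0, high: float = 5.0) -> List[float]:
    # Map each token back to the center of its bin, then undo the scaling.
    width = (high - low) / n_bins
    return [(low + (t + 0.5) * width) * scale for t in tokens]

series = [10.0, 12.0, 8.0, 30.0]
tokens, scale = tokenize_series(series)
print(tokens, scale)
print(detokenize(tokens, scale))
```

Once a series is a sequence of token ids, forecasting becomes next-token prediction, exactly as in a language model. The price is quantization error, visible in how the round trip maps nearby values to the same bin center.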

Additionally, a paper exploring time series analysis using Large Language Models (LLMs) was published in 2024.

Transformer

- Transformers in Time Series: A Survey (v1: 15.Feb.2022, v5: 11.May.2023)

- A Survey on Time-Series Pre-Trained Models (v1: 18.May.2023, v2: 4.Oct.2024)

- Long-Range Transformers for Dynamic Spatiotemporal Forecasting (v1: 24.Sep.2021, v3: 18.Mar.2023)
  - github:spacetimeformer

- Timer: Generative Pre-trained Transformers Are Large Time Series Models (v1: 4.Feb.2024, v3: 18.Oct.2024)
  - github:Large-Time-Series-Model

- Gateformer: Advancing Multivariate Time Series Forecasting through Temporal and Variate-Wise Attention with Gated Representations (v1: 1.May.2025, v2: 9.May.2025)
  - github:Gateformer

- A decoder-only foundation model for time-series forecasting (v1: 14.Oct.2023, v4: 17.Apr.2024)
  - github:TimesFM

- Unified Training of Universal Time Series Forecasting Transformers (v1: 4.Feb.2024, v2: 22.May.2024)
  - github:Uni2TS

- Chronos: Learning the Language of Time Series (v1: 12.Mar.2024, v3: 4.Nov.2024)
  - github:chronos-forecasting

- TimeGPT-1 (v1: 5.Oct.2023, v3: 27.May.2024)
  - github:nixtlar

- Time-LLM: Time Series Forecasting by Reprogramming Large Language Models (Published: 16.Jan.2024, Last Modified: 8.Apr.2024)
  - github:Time-LLM

LLM

- Position Paper: What Can Large Language Models Tell Us about Time Series Analysis (v1: 5.Feb.2024, v2: 1.Jun.2024)

IMO: While Transformer- and LLM-based analyses show promise, their lack of interpretability is a concern. Traditional time series analysis methods, on the other hand, provide valuable insights grounded in a strong mathematical foundation. In my opinion, we shouldn’t abandon traditional methods just yet.
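As a small illustration of that interpretability, consider fitting a first-order autoregressive model, AR(1), $x_t = \phi x_{t-1} + \varepsilon_t$, by ordinary least squares. The fitted coefficient $\phi$ has a direct meaning: how strongly the previous value carries over into the next one. That kind of meaning is hard to extract from a large neural model. This is a minimal sketch with no intercept term, fitted to a synthetic, noise-free series chosen for illustration.

```python
from typing import List

def fit_ar1(x: List[float]) -> float:
    """Least-squares estimate of phi in x_t = phi * x_{t-1} + noise (no intercept)."""
    num = sum(x[t] * x[t - 1] for t in range(1, len(x)))
    den = sum(x[t - 1] ** 2 for t in range(1, len(x)))
    return num / den

def forecast_ar1(last: float, phi: float, steps: int) -> List[float]:
    # Iterate the fitted recurrence forward from the last observation.
    out = []
    for _ in range(steps):
        last = phi * last
        out.append(last)
    return out

# Synthetic series generated with phi = 0.8 and no noise.
series = [10.0]
for _ in range(9):
    series.append(0.8 * series[-1])

phi = fit_ar1(series)
print(round(phi, 6))
print([round(v, 3) for v in forecast_ar1(series[-1], phi, 3)])
```

Because |phi| < 1, the model also tells us, analytically, that the process is stationary and that forecasts decay geometrically toward zero.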

18.4. Reinforcement Learning

The “third golden age” of AI arrived with breakthroughs like Deep Q-Networks (DQNs) conquering Atari games and AlphaGo defeating the Go world champion. These achievements popularized the term “Deep Learning.”

While these achievements are rooted in reinforcement learning (RL), Transformers are now starting to influence RL research as well.

Here are two notable examples:

- Decision Transformer (2021)

This model tackles offline RL[4], where the agent cannot interact with the environment during training. It casts RL as a sequence modeling problem: a causal Transformer is trained on past trajectories represented as sequences of returns-to-go, states, and actions, and it generates actions conditioned on a desired return.

- MAT: Multi-Agent Transformer (2022)

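MAT addresses cooperative multi-agent settings, where multiple agents must coordinate their actions. It treats multi-agent decision-making as a sequence modeling problem: an encoder processes the agents' observations, and a decoder generates each agent's action auto-regressively, conditioned on the actions already chosen by the preceding agents.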
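The sequence-modeling view of Decision Transformer can be illustrated by how a trajectory is turned into tokens. The paper conditions on the return-to-go $\hat{R}_t = \sum_{t'=t}^{T} r_{t'}$ (the sum of future rewards) and interleaves it with states and actions. The sketch below builds that interleaved sequence for a toy trajectory. The tuple-based "tokens" are a simplification, since the actual model embeds each element into a vector before feeding it to a causal Transformer; the state and action names are hypothetical.

```python
from typing import List, Tuple

def returns_to_go(rewards: List[float]) -> List[float]:
    """R_t = r_t + r_{t+1} + ... + r_T (undiscounted), computed right to left."""
    rtg, running = [], 0.0
    for r in reversed(rewards):
        running += r
        rtg.append(running)
    return rtg[::-1]

def to_sequence(states: List[str], actions: List[str],
                rewards: List[float]) -> List[Tuple[str, object]]:
    # Interleave (return-to-go, state, action) for every time step.
    seq = []
    for R, s, a in zip(returns_to_go(rewards), states, actions):
        seq.extend([("R", R), ("s", s), ("a", a)])
    return seq

states = ["s0", "s1", "s2"]
actions = ["left", "right", "right"]
rewards = [1.0, 0.0, 2.0]
print(returns_to_go(rewards))
print(to_sequence(states, actions, rewards))
```

At inference time, the paper supplies a desired target return as the first return-to-go and decrements it by each observed reward, so the model is asked to generate actions consistent with achieving that return.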

Paper

- Decision Transformer: Reinforcement Learning via Sequence Modeling (v1: 2.Jun.2021, v2: 24.Jun.2021)

- Multi-Agent Reinforcement Learning is a Sequence Modeling Problem (v1: 30.May.2022, v3: 28.Oct.2022)

Survey

- Transformers in Reinforcement Learning: A Survey (12.Jul.2023)

- Transformer in Reinforcement Learning for Decision-Making: A Survey (30.Oct.2023)

18.5. Transformer Alternatives

Many alternative models to the Transformer are continuously being proposed.

Here are some recent examples:

- Synthesizer: Rethinking Self-Attention in Transformer Models (v1: 2.May.2020, v3: 24.May.2021)

- FNet: Mixing Tokens with Fourier Transforms (v1: 9.May.2021, v4: 26.May.2022)

- Are Pre-trained Convolutions Better than Pre-trained Transformers? (v1: 7.May.2021, v2: 30.Jan.2022)

- Efficiently Modeling Long Sequences with Structured State Spaces (v1: 31.Oct.2021, v3: 5.Aug.2022)

- RWKV: Reinventing RNNs for the Transformer Era (v1: 22.May.2023, v2: 11.Dec.2023)

- (RetNet) Retentive Network: A Successor to Transformer for Large Language Models (v1: 17.Jul.2023, v4: 9.Aug.2023)

- Mamba: Linear-Time Sequence Modeling with Selective State Spaces (v1: 1.Dec.2023, v2: 31.May.2024)

- KAN: Kolmogorov-Arnold Networks (v1: 30.Apr.2024, v4: 16.Jun.2024)

- xLSTM: Extended Long Short-Term Memory (v1: 7.May.2024, v2: 6.Dec.2024)

- nGPT: Normalized Transformer with Representation Learning on the Hypersphere (v1: 1.Oct.2024)

- (minLSTMs and minGRUs) Were RNNs All We Needed? (v1: 2.Oct.2024, v3: 28.Nov.2024)

- Wave Network: An Ultra-Small Language Model (v1: 4.Nov.2024, v4: 11.Nov.2024)

- Titans: Learning to Memorize at Test Time (v1: 31.Dec.2024)

- $\text{Transformer}^{2}$: Self-adaptive LLMs (v1: 9.Jan.2025, v2: 14.Jan.2025)

- Large Language Diffusion Models (v1: 14.Feb.2025, v2: 18.Feb.2025)
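To give a flavor of how some of these alternatives avoid attention's quadratic cost, here is a scalar sketch of the minGRU recurrence from "Were RNNs All We Needed?": $h_t = (1 - z_t)\,h_{t-1} + z_t\,\tilde{h}_t$. The gate $z_t$ and the candidate $\tilde{h}_t$ depend only on the input $x_t$, not on $h_{t-1}$. As a result, the recurrence is linear in $h$ and can be unrolled into a closed form built from prefix products and prefix sums, which is what allows a parallel scan during training. The scalar weights below are arbitrary assumptions; a real model uses vectors and learned weight matrices.

```python
import math
from itertools import accumulate
from typing import List

# Toy scalar parameters (assumptions for illustration only).
W_Z, B_Z = 1.5, -0.5   # gate:      z_t  = sigmoid(W_Z * x_t + B_Z)
W_H, B_H = 0.8, 0.1    # candidate: h~_t = W_H * x_t + B_H

def sigmoid(v: float) -> float:
    return 1.0 / (1.0 + math.exp(-v))

def coeffs(xs: List[float]):
    """a_t = 1 - z_t and b_t = z_t * h~_t; both depend only on x_t."""
    zs = [sigmoid(W_Z * x + B_Z) for x in xs]
    a = [1.0 - z for z in zs]
    b = [z * (W_H * x + B_H) for z, x in zip(zs, xs)]
    return a, b

def min_gru_sequential(xs: List[float], h0: float = 0.0) -> List[float]:
    # Step-by-step recurrence h_t = a_t * h_{t-1} + b_t.
    a, b = coeffs(xs)
    hs, h = [], h0
    for at, bt in zip(a, b):
        h = at * h + bt
        hs.append(h)
    return hs

def min_gru_scan(xs: List[float], h0: float = 0.0) -> List[float]:
    # Closed form h_t = P_t * (h0 + sum_{k<=t} b_k / P_k), where P_t is the
    # cumulative product of a. Both cumulative operations are prefix scans.
    a, b = coeffs(xs)
    P = list(accumulate(a, lambda p, q: p * q))
    S = list(accumulate(bk / pk for bk, pk in zip(b, P)))
    return [pt * (h0 + st) for pt, st in zip(P, S)]

xs = [0.5, -1.0, 2.0, 0.3]
seq = min_gru_sequential(xs)
par = min_gru_scan(xs)
print([round(v, 6) for v in seq])
print(all(abs(u - v) < 1e-9 for u, v in zip(seq, par)))
```

The direct division by $P_k$ is only safe for short toy sequences, because the cumulative product shrinks quickly. Practical implementations typically perform this computation in log space for numerical stability.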

While these alternatives are not yet mainstream, that does not mean they are inferior to the Transformer.

Currently, research resources primarily focus on the Transformer and its variants. However, if one of these alternatives or a future model proves significantly more effective than the Transformer, it could potentially become the dominant architecture.

[1]

ELMo is an LSTM-based model. For commercial models, it is impossible to say definitively whether they are Transformer-based, as some do not disclose details of their internal architecture. ↩︎

[2]

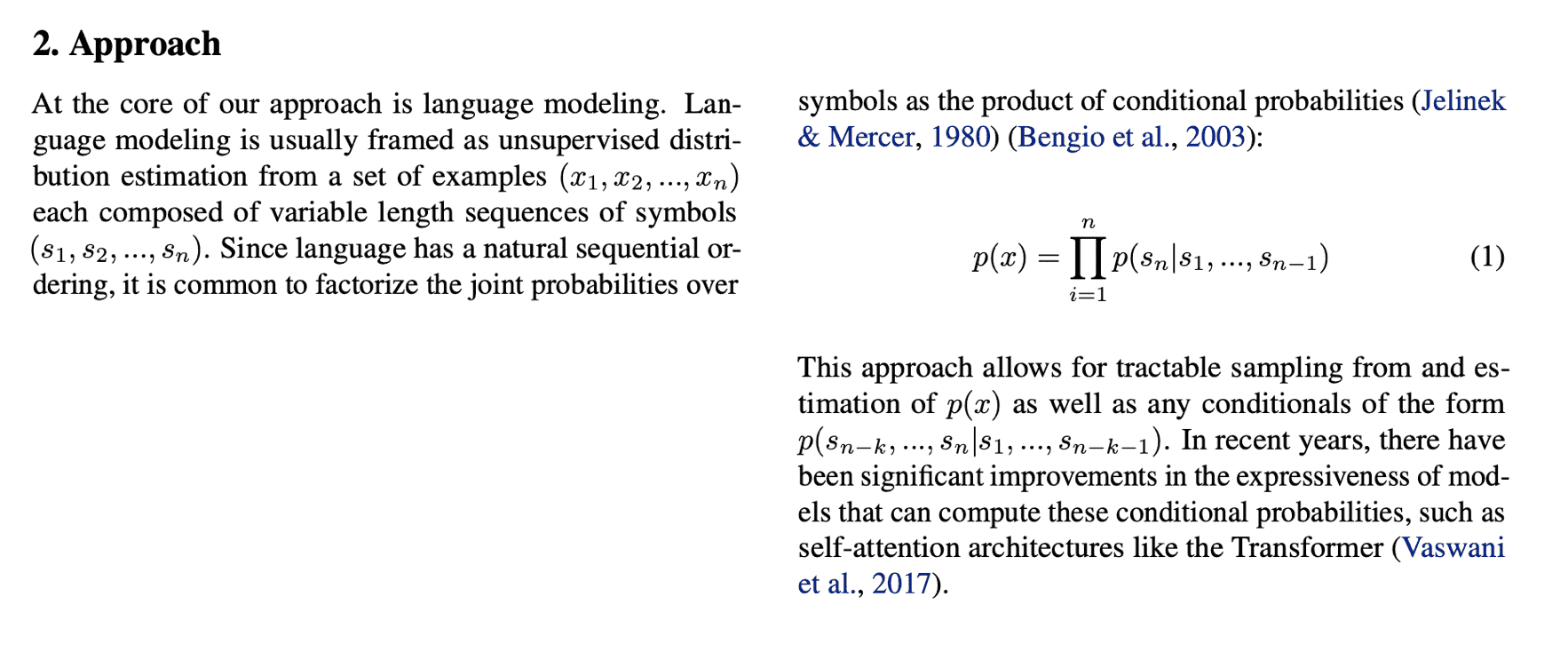

It’s fascinating to me that GPT-2, a decoder built from Transformer modules, presents only one formula in its paper:

$$
p(x) = \prod_{i=1}^{n} p(s_{i} \mid s_{1}, \ldots, s_{i-1})
$$

This is a familiar representation of language models for us.
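A tiny worked example shows why the chain-rule factorization of $p(x)$ is shared across model families. An N-gram model approximates each conditional $p(s_i \mid s_1, \ldots, s_{i-1})$ by conditioning only on the previous $N-1$ tokens. The sketch below uses a bigram ($N = 2$) model estimated by counting over a toy corpus. The corpus and the `<s>`/`</s>` sentence-boundary markers are assumptions for illustration. An RNN or Transformer language model keeps the same product but computes each conditional with a neural network over the full prefix.

```python
from collections import Counter

corpus = [
    "the cat sat",
    "the cat ran",
    "the dog sat",
]

# Count contexts and bigrams, with sentence-boundary markers.
context_counts: Counter = Counter()
bigram_counts: Counter = Counter()
for line in corpus:
    tokens = ["<s>"] + line.split() + ["</s>"]
    for prev, cur in zip(tokens, tokens[1:]):
        context_counts[prev] += 1
        bigram_counts[(prev, cur)] += 1

def bigram_prob(prev: str, cur: str) -> float:
    # Maximum-likelihood estimate p(cur | prev) = count(prev, cur) / count(prev).
    if context_counts[prev] == 0:
        return 0.0
    return bigram_counts[(prev, cur)] / context_counts[prev]

def sentence_prob(sentence: str) -> float:
    # Chain rule: multiply the conditional probability of each token given
    # its history, where the history is truncated to one previous token.
    tokens = ["<s>"] + sentence.split() + ["</s>"]
    p = 1.0
    for prev, cur in zip(tokens, tokens[1:]):
        p *= bigram_prob(prev, cur)
    return p

print(sentence_prob("the cat sat"))
```

Here $p(\text{the cat sat}) = 1 \times \tfrac{2}{3} \times \tfrac{1}{2} \times 1 = \tfrac{1}{3}$, while an unseen bigram such as "dog ran" drives the whole product to zero. Avoiding that failure is one motivation for smoothing and, ultimately, for neural language models.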

This formula reveals that N-gram models, RNN-based language models, and LLMs all share the foundation of statistical language models, even though LLMs may seem like technology from another world, or even magic. It is much like how DNA forms the basis of all life, from bacteria to human beings. ↩︎

[3]

As a database engineer, I initially became interested in Deep Learning (and Reinforcement Learning) to explore the potential of applying LLMs to system administration tasks. ↩︎

[4]

Online reinforcement learning agents interact with an environment in real-time. For example, in online learning, an AI agent plays the game of Go in real-time, adjusting its strategy based on the outcomes of each move during the game.

In contrast, offline reinforcement learning uses a fixed dataset of pre-collected experiences to train the agent, such as learning from a historical dataset of Go games. This is useful for complex or costly environments where direct interaction is impractical, like optimizing industrial processes based on historical data. ↩︎