8. Long Short-Term Memory: LSTM

LSTM (Long Short-Term Memory), invented in 1997 by S. Hochreiter and J. Schmidhuber, is a type of recurrent neural network (RNN) specifically designed to handle long-term dependencies in sequences.

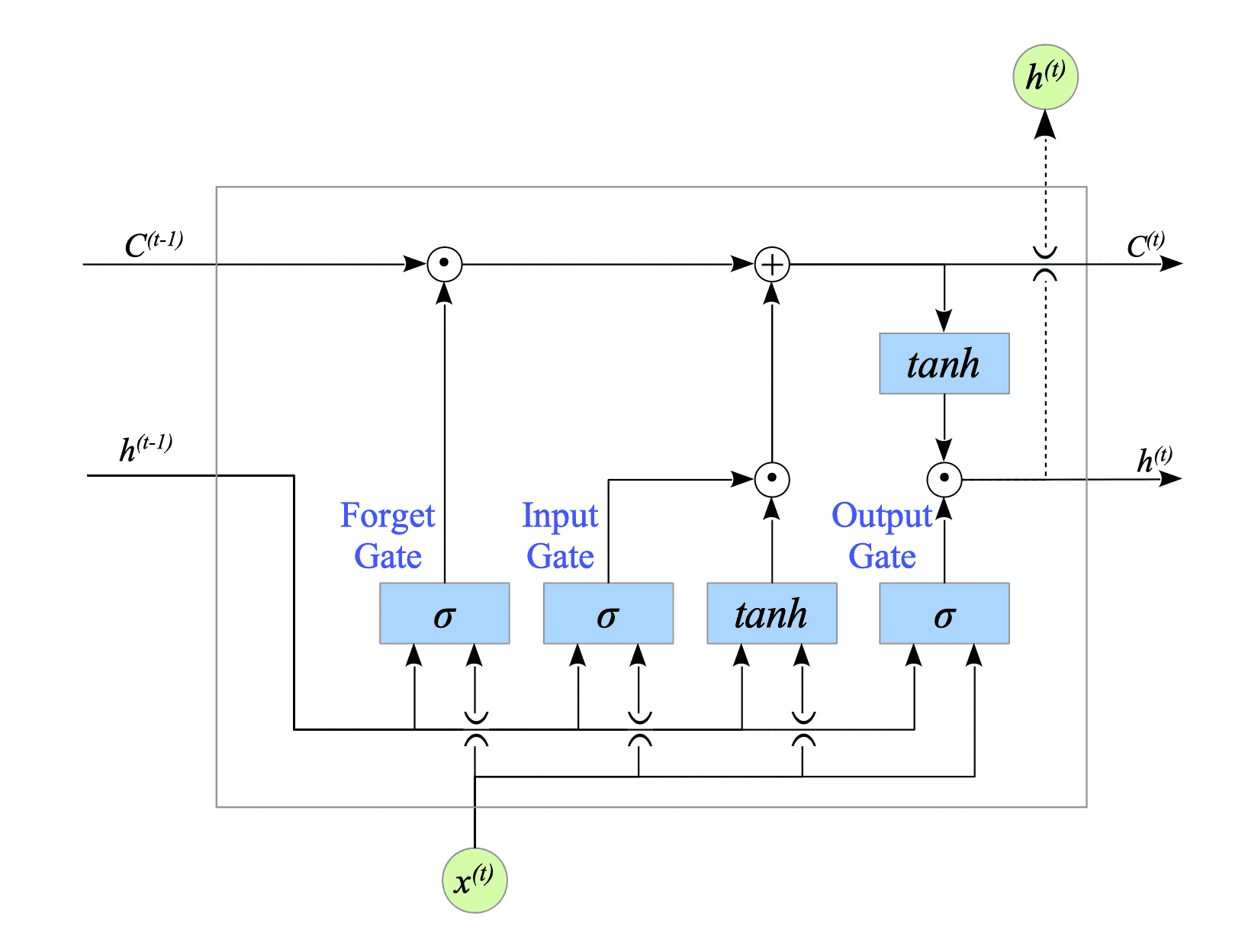

LSTM networks work by using a special type of weights and biases that can store information for long periods of time. These weights and biases are controlled by three gates: the input gate, forget gate, and output gate.

- The input gate controls how much new information is added to the weights and biases.

- The forget gate controls how much information is removed from the weights and biases.

- The output gate controls how much information from the weights and biases is used by the LSTM network.

Fig.8-1: LSTM Architecture
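To make the role of the three gates concrete, here is a minimal sketch of one forward step of an LSTM cell in plain Python. It assumes the common formulation without peephole connections, with a tanh "candidate" layer (called `g` here) that proposes new values for the cell state. The names `lstm_step` and `init_params` and the `(W, U, b)` parameter layout are hypothetical illustrations, not the implementation developed later in this chapter.

```python
import math
import random

def sigmoid(x: float) -> float:
    # Numerically stable logistic function.
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)

def matvec(M, v):
    # Plain-Python matrix-vector product.
    return [sum(m * x for m, x in zip(row, v)) for row in M]

def lstm_step(x, h_prev, c_prev, params):
    """One forward step of a standard LSTM cell (assumed formulation).

    params maps each gate name ('f', 'i', 'g', 'o') to a tuple (W, U, b):
    W multiplies the input x, U multiplies the previous hidden state h_prev.
    """
    def pre(gate):
        W, U, b = params[gate]
        return [wx + uh + bb for wx, uh, bb in zip(matvec(W, x), matvec(U, h_prev), b)]

    f = [sigmoid(z) for z in pre("f")]    # forget gate: fraction of c_prev to keep
    i = [sigmoid(z) for z in pre("i")]    # input gate: fraction of the candidate to write
    g = [math.tanh(z) for z in pre("g")]  # candidate values for the cell state
    o = [sigmoid(z) for z in pre("o")]    # output gate: fraction of the cell to expose

    # c_t = f_t * c_{t-1} + i_t * g_t   (element-wise)
    c = [ft * cp + it * gt for ft, cp, it, gt in zip(f, c_prev, i, g)]
    # h_t = o_t * tanh(c_t)
    h = [ot * math.tanh(ct) for ot, ct in zip(o, c)]
    return h, c

def init_params(n_in, n_hidden, seed=0):
    # Hypothetical initialisation: small uniform weights, zero biases.
    rng = random.Random(seed)
    params = {}
    for gate in ("f", "i", "g", "o"):
        W = [[rng.uniform(-0.1, 0.1) for _ in range(n_in)] for _ in range(n_hidden)]
        U = [[rng.uniform(-0.1, 0.1) for _ in range(n_hidden)] for _ in range(n_hidden)]
        b = [0.0] * n_hidden
        params[gate] = (W, U, b)
    return params

# Usage: run a short sequence of 3-dimensional inputs through a 2-unit cell.
params = init_params(n_in=3, n_hidden=2)
h, c = [0.0, 0.0], [0.0, 0.0]
for x in ([1.0, 0.0, -1.0], [0.5, 0.5, 0.5]):
    h, c = lstm_step(x, h, c, params)
print("h =", h, "c =", c)
```

Note that the cell state `c` is updated only by an element-wise scale (forget gate) and an element-wise addition (input gate times candidate). When the forget gate saturates near 1 and the input gate near 0, `c` passes through a step almost unchanged, which is what lets the network hold information over long spans.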

Many good resources explain LSTM networks in detail, such as:

While many resources cover LSTM architecture, I have not found a clear and concise explanation of LSTM backpropagation. Therefore, the following sections will delve into the backpropagation process and LSTM implementation in detail.

Chapter Contents