9. Gated Recurrent Unit: GRU

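The GRU (Gated Recurrent Unit), introduced in 2014 by K. Cho et al., is similar to the LSTM but has a simpler architecture: it keeps a single hidden state instead of a separate cell state and hidden state, and it uses two gates instead of three.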

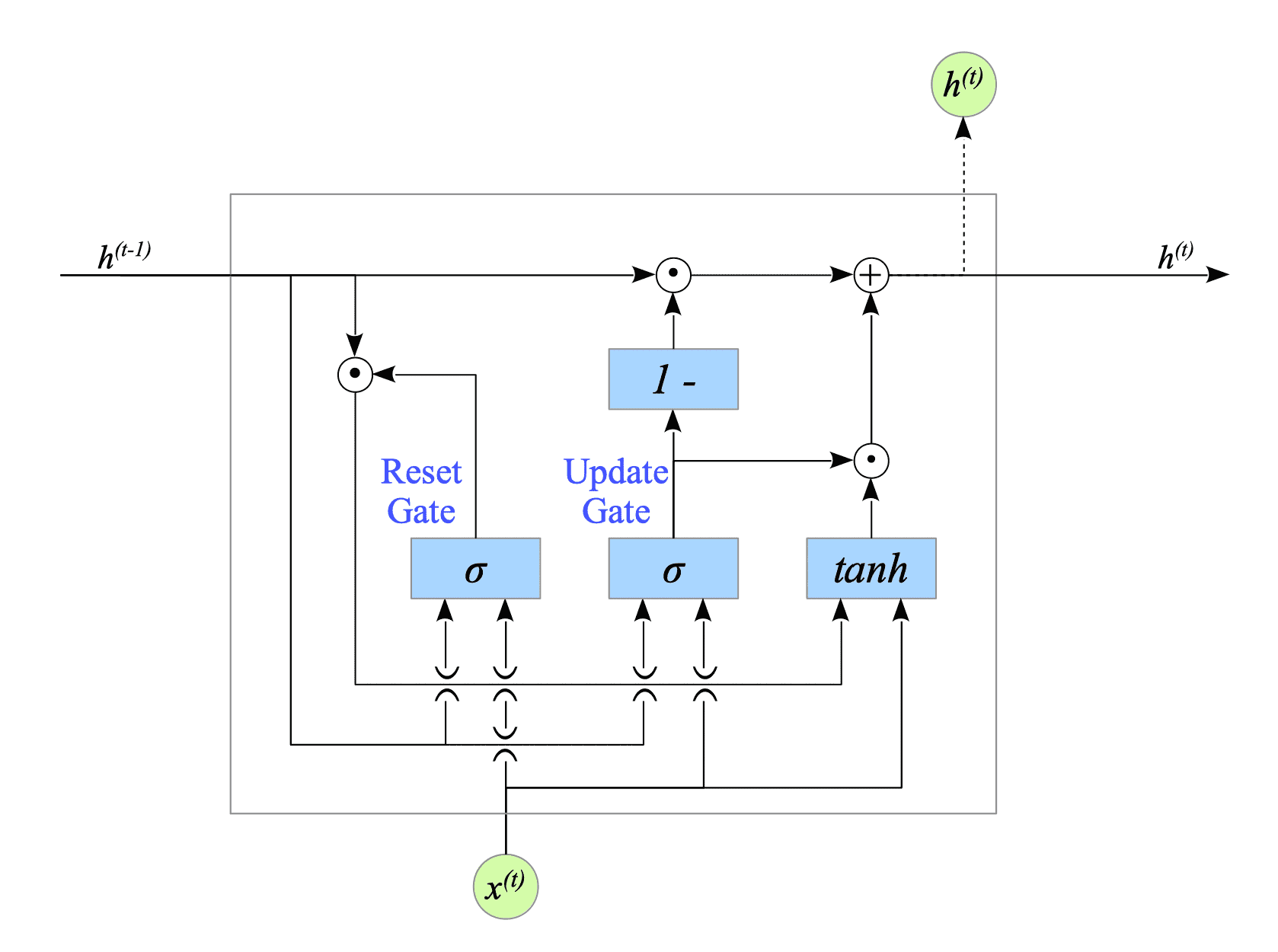

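A GRU maintains a hidden state that is passed from one time step to the next. Two gates, the update gate and the reset gate, control how that state changes. Each gate has its own learned weights and biases and outputs values between 0 and 1.

- The update gate controls how much of the previous hidden state is carried over to the current time step and how much is replaced by the new candidate state.

- The reset gate controls how much of the previous hidden state is used when computing the candidate state. A value near 0 makes the unit ignore the past state for that computation.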

Fig.9-1: GRU Architecture
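The role of the two gates can be made concrete with a minimal NumPy sketch of a single GRU time step. This is an illustration under stated assumptions, not the implementation developed later in this chapter: the names `init_params`, `gru_step`, and the parameter keys (`Wz`, `Uz`, `bz`, ...) are hypothetical, and the final interpolation follows the convention in which the update gate `z` weights the previous state. Some papers and libraries swap the roles of `z` and `1 - z`, so check this against Fig.9-1 before relying on it.

```python
import numpy as np


def sigmoid(x):
    """Logistic function; squashes values into (0, 1)."""
    return 1.0 / (1.0 + np.exp(-x))


def init_params(input_size, hidden_size, seed=0):
    """Create small random GRU parameters (hypothetical naming scheme)."""
    rng = np.random.default_rng(seed)
    params = {}
    for gate in ("z", "r", "h"):  # update gate, reset gate, candidate state
        params["W" + gate] = rng.normal(scale=0.1, size=(hidden_size, input_size))
        params["U" + gate] = rng.normal(scale=0.1, size=(hidden_size, hidden_size))
        params["b" + gate] = np.zeros(hidden_size)
    return params


def gru_step(x, h_prev, p):
    """Compute one GRU time step and return (h, z, r)."""
    # Update gate: how much of the previous hidden state to keep.
    z = sigmoid(p["Wz"] @ x + p["Uz"] @ h_prev + p["bz"])
    # Reset gate: how much of the previous hidden state feeds the candidate.
    r = sigmoid(p["Wr"] @ x + p["Ur"] @ h_prev + p["br"])
    # Candidate state, computed from the input and the reset-scaled past state.
    h_cand = np.tanh(p["Wh"] @ x + p["Uh"] @ (r * h_prev) + p["bh"])
    # Assumed convention: z near 1 keeps the old state, z near 0 takes the candidate.
    h = z * h_prev + (1.0 - z) * h_cand
    return h, z, r


# Usage: run one step on a toy input.
params = init_params(input_size=3, hidden_size=4)
x = np.array([1.0, 0.5, -0.5])
h_prev = np.zeros(4)
h, z, r = gru_step(x, h_prev, params)
print("update gate:", z)
print("reset gate: ", r)
print("new state:  ", h)
```

Note that the gates act on the hidden state, not on the weights themselves: the weights and biases stay fixed during the forward pass and change only during training.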

For a detailed explanation of GRU, see the following documents:

As with the LSTM part, the following sections will delve into the backpropagation process and GRU implementation in detail.

Chapter Contents